
Nov 14, 2024

AI Quality Assurance: The Cornerstone of Socially Acceptable AI Development

Building internal systems that align with societal expectations to ensure accountability for AI quality risks

The integration of AI into everyday products has become increasingly prevalent. While these AI-powered products undoubtedly enhance our lives and work, are we adequately addressing the associated risks alongside the expected improvements in convenience?

What exactly are the risks associated with AI?

How should we respond when accidents or errors occur?

How can we develop products with these risks in mind?

Without addressing these questions, AI-powered products may struggle to gain acceptance in society—particularly in areas such as mobility, where human lives are at stake. Recognizing this, the global push to establish AI standards is gaining momentum.

At DENSO, under the leadership of the Software Function Unit and with extensive cooperation from the Quality Control Division and business units developing AI-powered products, we have initiated AI Quality Assurance efforts. We are promoting activities aimed at creating a society where AI-powered products can be used more safely.


How Much Can We Rely on AI?

As AI-powered products proliferate across all aspects of our society, they promise to bring new possibilities and improve convenience in daily life and business.

For instance, you may have noticed more people using generative AI to gather information or brainstorm new ideas. Perhaps you have made a restaurant or hospital appointment by talking to an AI-powered automated voice system.

Whether or not we are aware of it, AI has permeated many aspects of our lives. AI-powered products and services are being introduced into social infrastructure sectors, including transportation, finance, electricity, gas, and telecommunications.

With this growing reliance comes a set of concerns. What happens if AI, now embedded into critical societal systems, fails? Could such a failure trigger a major incident or accident?

Given AI’s profound impact, it is imperative to establish standards and rules for managing these risks. However, the pace of technological innovation surrounding AI is rapid, and with various players across nations and companies engaging in its development, standards and rules regarding AI quality are still evolving.

While traditional software behaves as defined by human-written instructions, AI behaves according to the data it was trained on, so it can produce unexpected outcomes depending on its learning environment. Both the societal trends surrounding AI and these technical challenges must be taken into account when ensuring quality and creating mechanisms for quality assurance.

Rulemaking to Enhance Social Acceptance of AI

For AI-powered products to gain social acceptance, AI quality must be guaranteed. Because an AI system's behavior is determined by data, it is crucial to define a development process that builds in quality and then to carry out development according to that process.

In recent years, numerous standards and guidelines on AI quality have been published around the world. For instance, the National Institute of Standards and Technology (NIST) released the AI Risk Management Framework (AI RMF) in January 2023, while the Consortium of Quality Assurance for Artificial-Intelligence-based products and services (QA4AI) has introduced the QA4AI Guidelines. These developments signal a concerted effort to establish rules for the societal acceptance of AI.

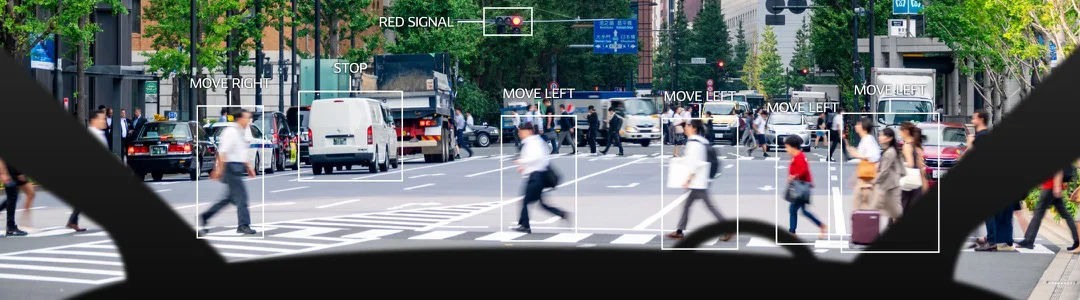

In the automotive industry, where the highest levels of safety are demanded due to the responsibility of safeguarding human lives, the development of AI-powered vehicle products is advancing. Examples include systems such as collision damage mitigation and prevention brakes, as well as features that monitor driver conditions, such as detecting drowsiness or distracted driving. As AI-powered products like autonomous driving systems become more widespread, new regulations tailored to these technological advancements will inevitably be required.

Safety has always been a priority in traditional automotive standards. However, with the growing adoption of autonomous driving systems, a new international standard, ISO 21448 (※1), was established in 2022 to enhance the safety of complex systems that include sensors and AI. This standard is now being implemented, particularly by automobile manufacturers.

Furthermore, international discussions on ensuring the quality and safety of AI in automotive products are gaining momentum, with preparations underway to release a new safety standard designed specifically for AI vehicle systems, ISO/PAS 8800 (※2).

※1 ISO 21448 (Safety of the intended functionality): An international standard that provides a framework and guidance for design and verification approaches to reduce and avoid risks of accidents due to inadequate performance/specifications of intended functions realized by sensors, algorithms, actuators, and electrical/electronic systems, and misuse by users.

※2 ISO/PAS 8800 (Safety and artificial intelligence): A publicly available specification on AI safety scheduled for publication in 2024. It describes AI safety attributes, risk factors, development and operation lifecycle, data quality, and evidence necessary to argue for AI safety.

DENSO's AI Quality Assurance Activities

Ensuring AI quality requires more than just setting safety standards. To develop cars and services with guaranteed AI quality, companies must actively promote their own AI quality assurance activities.

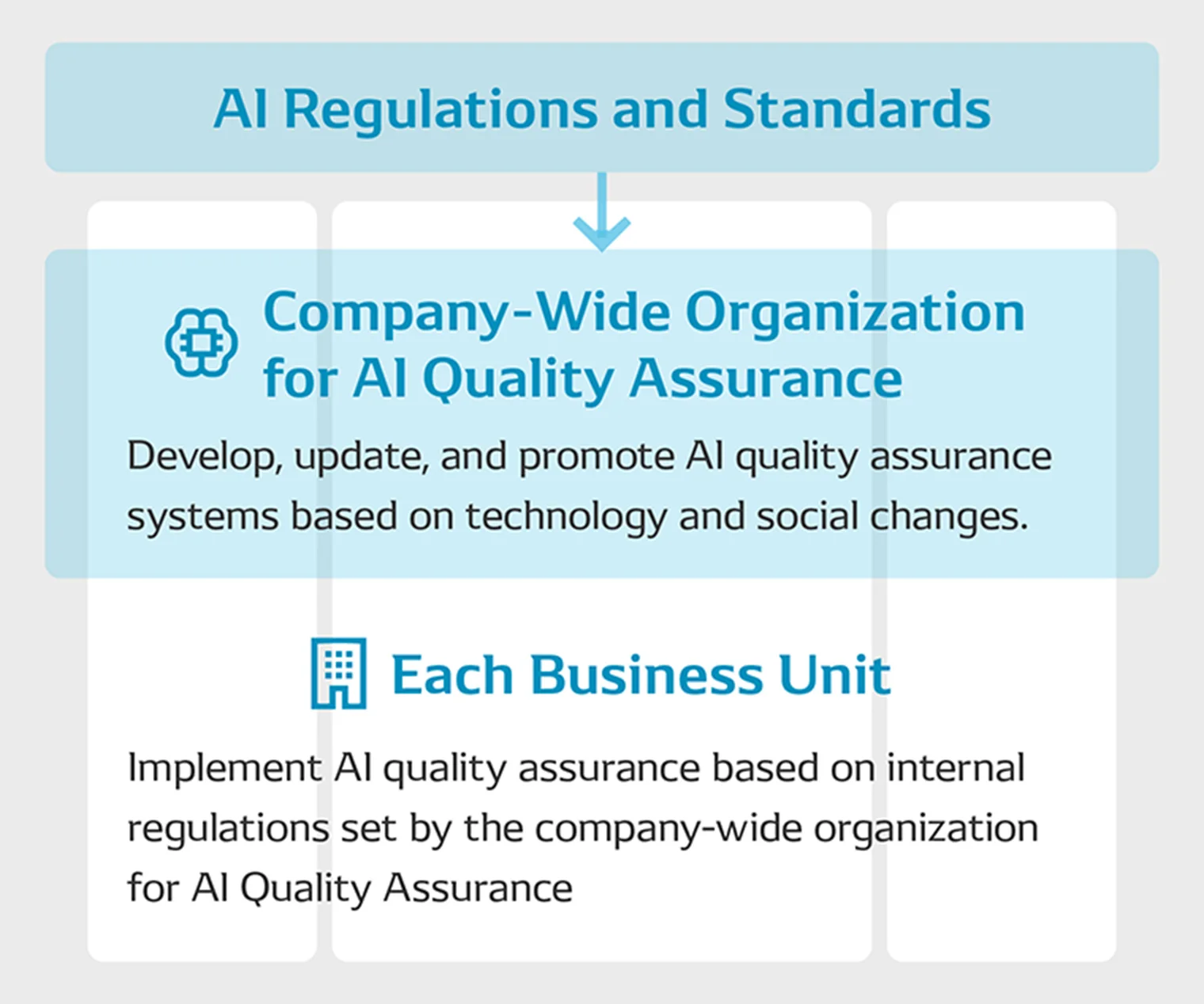

DENSO not only participates in various international standardization activities but also implements its own in-house initiatives for AI quality assurance. This involves developing a comprehensive AI quality assurance system across the entire company.

To build this system, DENSO's Quality Control Division collaborates closely with business units responsible for developing AI-powered products. Our efforts include creating internal standards based on global trends and AI’s societal acceptance, advancing technologies related to AI quality, and supporting the application of these advancements in actual products. Through these initiatives, we aim to ensure AI quality that aligns with global standards across the entire company.

Hiroshi Kuwajima from the Software Function Unit explains how this initiative began:

"Previously, individual divisions developing AI-powered products were responsible for their own quality assurance processes. Now, as we move forward, we are coordinating across the company to create a unified AI quality assurance system. This enables us to swiftly adapt to changes in the external environment, working closely with the Quality Control Division and divisions developing AI-powered products to share information and build a company-wide process for AI development and quality assurance."

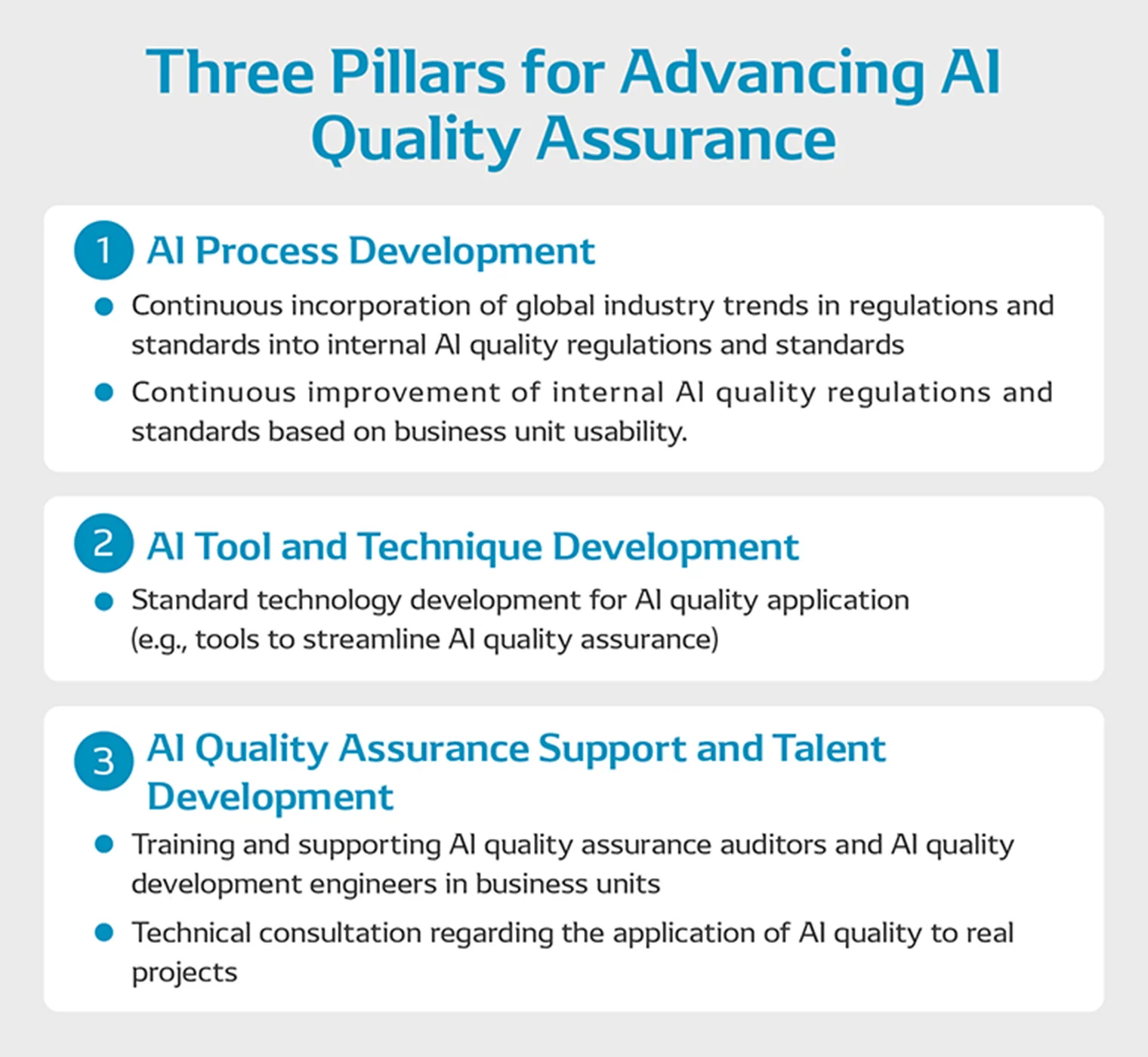

At DENSO, three pillars support company-wide AI quality assurance: AI Process Development, AI Tool and Technique Development, and AI Quality Assurance Support and Talent Development.

Our primary focus is establishing a robust AI quality assurance framework through two key initiatives. First, AI Process Development creates and continually refines AI development and quality assurance processes aligned with international regulations and guidelines. Second, AI Tool and Technique Development creates standard technology for AI quality, including tools that streamline AI quality assurance.

Building on these foundations, we will provide AI Quality Assurance Support and Talent Development to assist various business units in implementing effective AI quality assurance measures.

Next, we examine the specific initiatives for each of these areas.

Promoting Activities to Implement Quality Assurance for AI-powered Products Across the Company

AI Process Development

To begin with, we'll focus on AI Process Development. In today’s landscape of AI-powered product development, meeting regulatory requirements is essential. At DENSO, we are establishing our own AI quality assurance support criteria to ensure these demands are met.

In doing so, we have drawn on a range of sources, including the EU's proposed AI regulatory framework, international standards for AI risk management, and guidelines such as the Machine Learning Quality Management Guideline released by the National Institute of Advanced Industrial Science and Technology (AIST). Tetsuya Nakagami, of DENSO's Software Function Unit, elaborates on the development process:

“When creating these regulations, we referred to the IT industry's guidelines on Quality Management in AI Development. We also consulted academic papers on the process of AI development and quality assurance within the context of autonomous driving in the automotive industry.

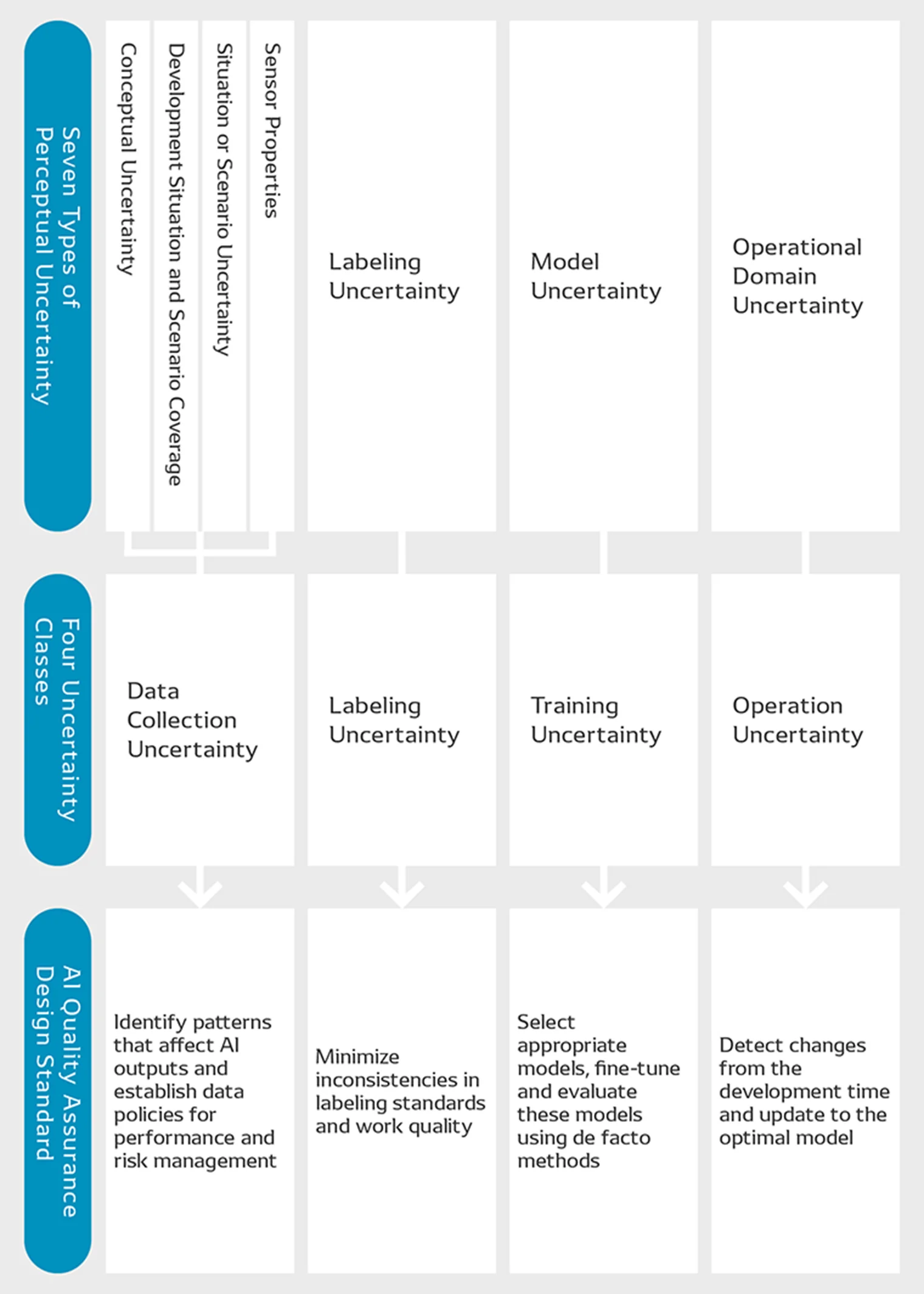

A referenced paper highlights Seven Types of Perceptual Uncertainty in Machine Learning Models as key factors for ensuring AI quality, for example in pedestrian recognition.

These uncertainties include patterns such as recognizing pedestrians pushing bicycles or wearing costumes, assigning correct class labels when objects like guardrails obscure part of a pedestrian, choosing the appropriate machine learning model and evaluating its training results, and addressing newly encountered pedestrian patterns during system operation. All of these are potential uncertainties that can arise during the development of systems using supervised learning.

These uncertainties can manifest as what is termed the Four Uncertainty Classes in AI Development Process: Data Collection Uncertainty, Labeling Uncertainty, Training Uncertainty, and Operation Uncertainty. DENSO's design standard for AI quality assurance aims to mitigate each uncertainty class.”
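As a rough illustration, the four uncertainty classes can be written down as a small Python taxonomy and used to check which classes still lack a mitigation. This is a minimal sketch, not DENSO's design standard: the pairing of each pedestrian example with a class is inferred from the order in which the text lists them, and `uncovered_classes` and the `mitigated` set are hypothetical names introduced here.

```python
from enum import Enum


class UncertaintyClass(Enum):
    """The four uncertainty classes named in the text."""
    DATA_COLLECTION = "Data Collection Uncertainty"
    LABELING = "Labeling Uncertainty"
    TRAINING = "Training Uncertainty"
    OPERATION = "Operation Uncertainty"


# Assumed pairing of the pedestrian examples with a class (an inference,
# not something the source states explicitly).
EXAMPLES = {
    "pedestrian pushing a bicycle or wearing a costume": UncertaintyClass.DATA_COLLECTION,
    "pedestrian partly hidden by a guardrail": UncertaintyClass.LABELING,
    "model selection and evaluation of training results": UncertaintyClass.TRAINING,
    "new pedestrian pattern seen during operation": UncertaintyClass.OPERATION,
}


def uncovered_classes(mitigated):
    """Return the uncertainty classes that have no mitigation recorded yet."""
    return [c for c in UncertaintyClass if c not in mitigated]


# Hypothetical review state: only two classes have a mitigation so far.
mitigated = {UncertaintyClass.DATA_COLLECTION, UncertaintyClass.TRAINING}
for c in uncovered_classes(mitigated):
    print(c.value)
```

A check like this only confirms that every class has been considered; it says nothing about whether a given mitigation is adequate.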

AI Tool and Technique Development

Next, we focus on AI Tool and Technique Development. Without clear processes to define, identify, and address AI-related risks, appropriate countermeasures cannot be taken.

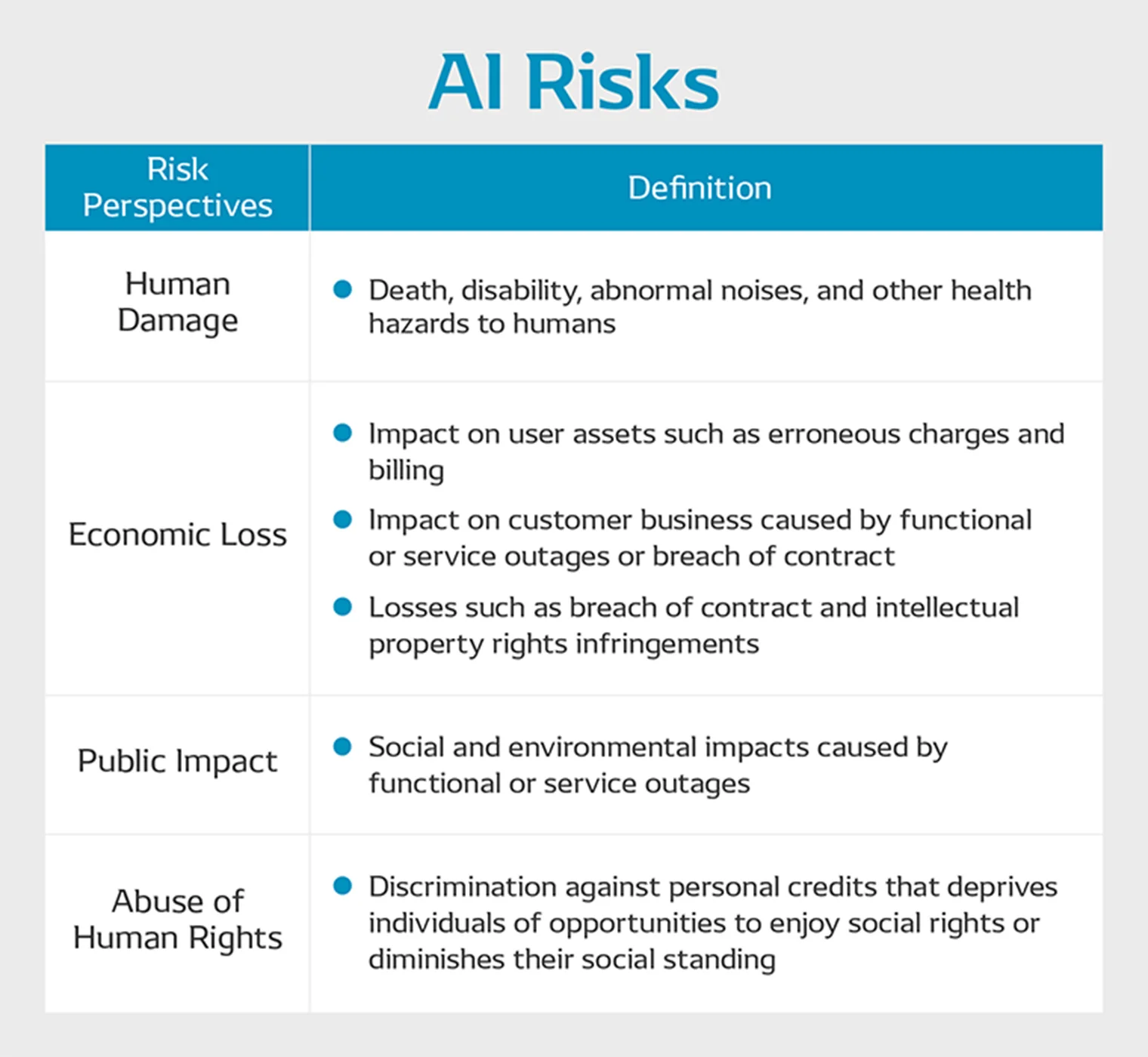

DENSO uses data from the AI Incident Database (AIID), managed by the Partnership on AI, which catalogs hundreds of AI-related incidents reported worldwide since 2014. Based on this data, DENSO has identified four types of AI risks.

With these four types of AI risks identified, Nakagami emphasizes the importance of being flexible and responsive to new risks that may arise as AI technology continues to evolve.

“These four types of risks are not exhaustive. There may be incidents, such as copyright infringement by generative AI models, that are not yet listed in the AIID. It's crucial to continuously gather information on accidents and incidents and update our AI risk definitions accordingly.”

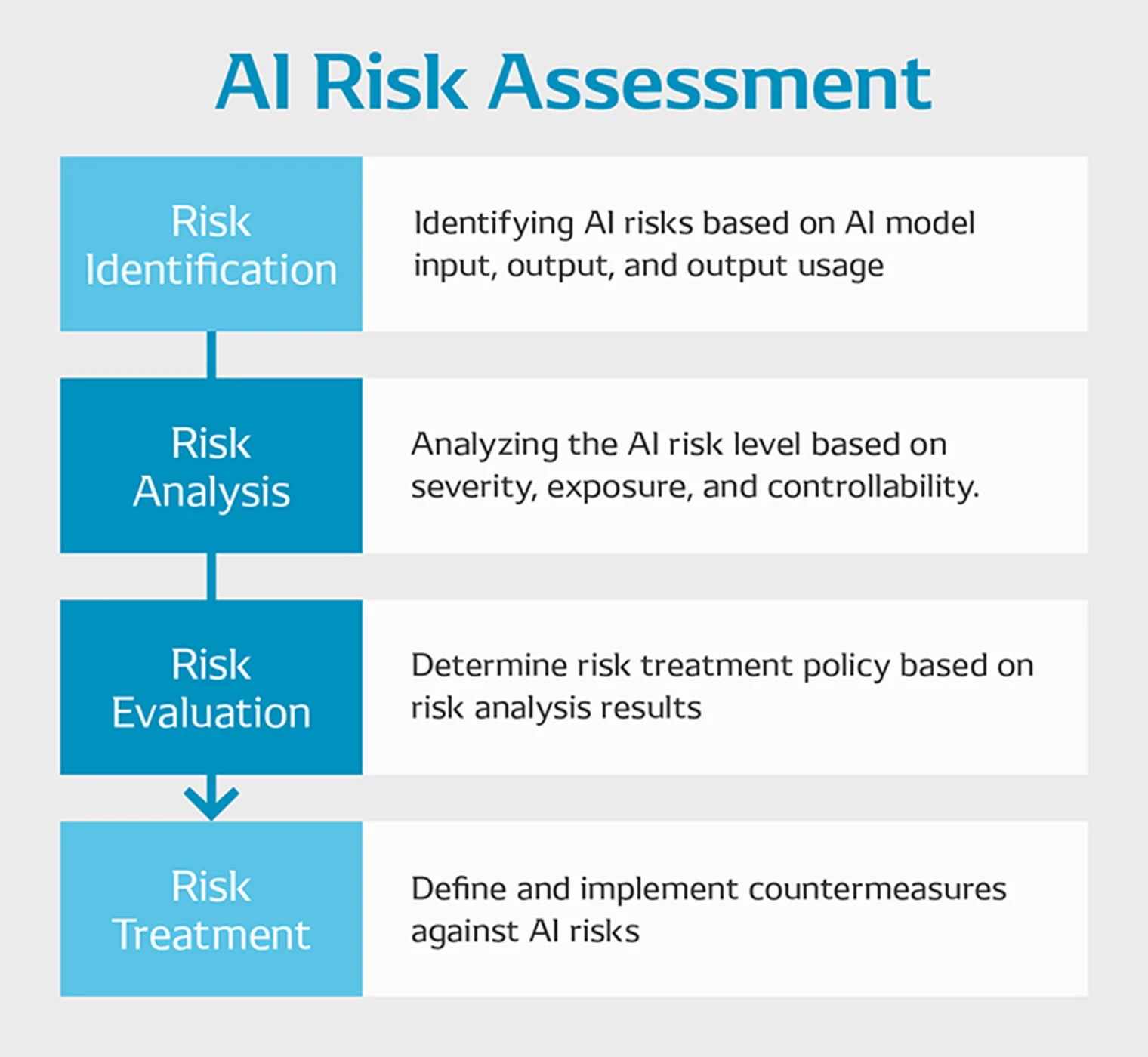

Building on these risk definitions, DENSO has developed an AI-specific risk assessment methodology aligned with the international risk management standard ISO 31000 (Risk management). This methodology encompasses four steps: risk identification, risk analysis, risk evaluation, and risk treatment.

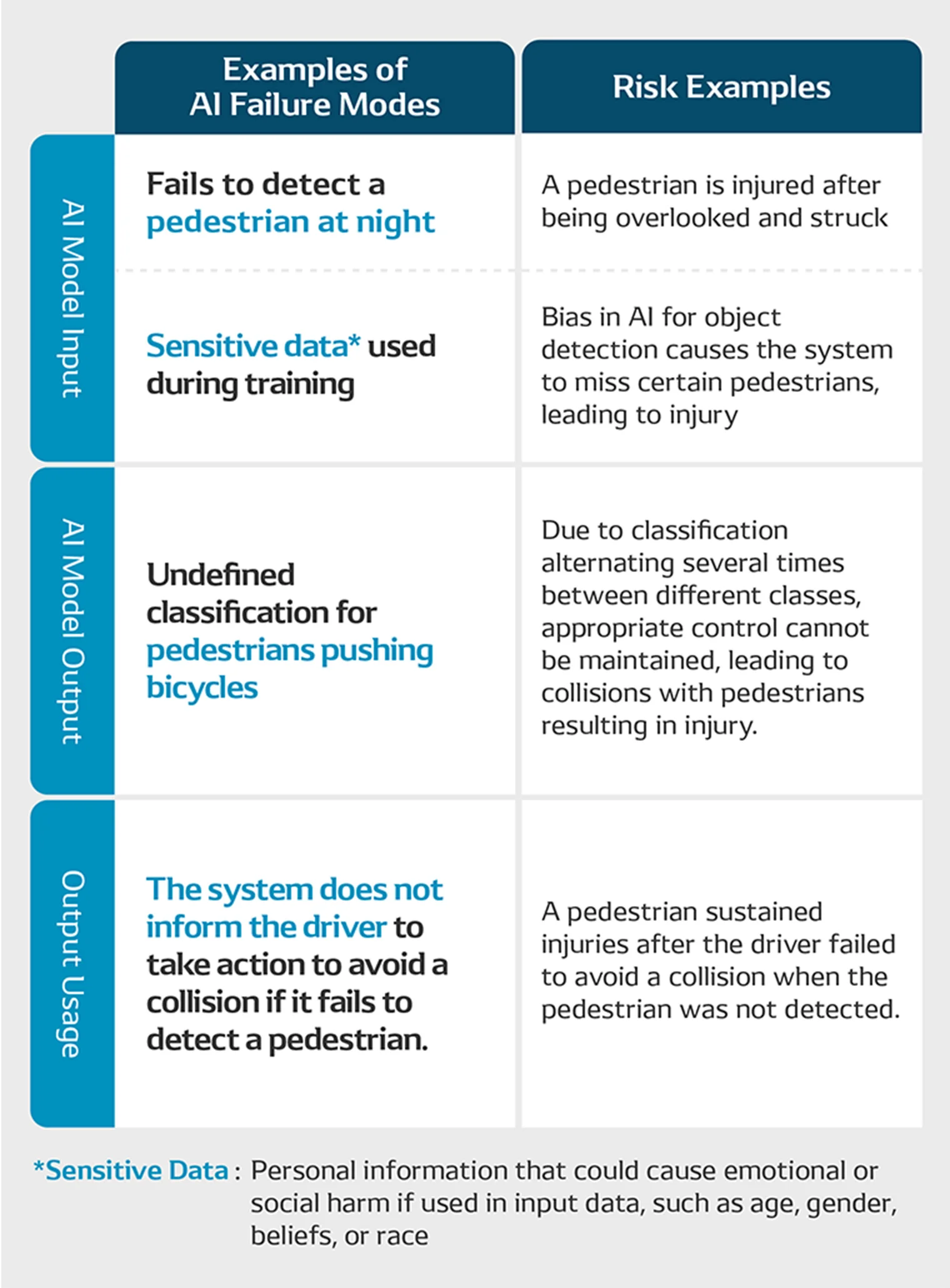

For example, when identifying risks, DENSO classifies AI-specific focus areas into three categories: AI model input, output, and output usage. Below is an example of identifying risks based on AI-specific focus points for an autonomous driving system equipped with AI-based pedestrian detection functionality.
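To make the four steps and three focus areas concrete, here is a minimal Python sketch of how such an assessment could be organized. It is not DENSO's methodology or its actual example: the risk entries, the 1 to 5 scales, the likelihood times severity score, and the threshold of 10 are all assumptions chosen for illustration, and ISO 31000 itself does not prescribe a scoring formula.

```python
from dataclasses import dataclass
from enum import Enum


class FocusArea(Enum):
    """The three AI-specific focus areas named in the text."""
    INPUT = "AI model input"
    OUTPUT = "AI model output"
    OUTPUT_USAGE = "AI model output usage"


@dataclass
class Risk:
    description: str
    focus_area: FocusArea
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (frequent)
    severity: int    # hypothetical scale: 1 (minor) to 5 (critical)


def identify():
    """Step 1, risk identification: list candidate risks per focus area."""
    return [
        Risk("Camera image degraded by heavy rain", FocusArea.INPUT, 3, 4),
        Risk("Pedestrian not detected", FocusArea.OUTPUT, 2, 5),
        Risk("Braking triggered by a false detection", FocusArea.OUTPUT_USAGE, 2, 3),
    ]


def analyze(risk):
    """Step 2, risk analysis: an assumed likelihood x severity score."""
    return risk.likelihood * risk.severity


def evaluate(score, threshold=10):
    """Step 3, risk evaluation: compare the score with an assumed threshold."""
    return score >= threshold


def treat(risk):
    """Step 4, risk treatment: placeholder that only records the decision."""
    return f"Plan mitigation for: {risk.description}"


def assess():
    """Run the four steps in order and collect the treatment decisions."""
    actions = []
    for risk in identify():
        if evaluate(analyze(risk)):
            actions.append(treat(risk))
    return actions


for action in assess():
    print(action)
```

Keeping each step as its own function mirrors the four-step structure, so a team could swap in its own scoring scheme or treatment workflow without touching the other steps.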

AI Quality Assurance Support and Talent Development

Each business unit at DENSO is implementing the company's AI quality assurance regulations and risk assessment methodologies. These are being applied across a wide array of products and services, including advanced safety and autonomous driving systems, agricultural harvesting robots, and AI-based driving diagnostic systems.

To facilitate this, a company-wide organization has developed checklists for evaluating AI processes. This includes system design checklists and AI software testing checklists, ensuring that the AI quality management regulations are accessible and practical for use in development environments. Naturally, any changes or updates to international laws and standards are reflected in these checklists.

Additionally, DENSO has already established an educational framework to deepen understanding of AI and expand the pool of associates capable of actively applying AI to their work. AI-related training content is offered at various levels, targeting everyone from product development team members to new hires and administrative staff.

By thoroughly executing these three pillars of activity, we can uphold accountability in managing AI quality risks. To effectively implement an AI quality assurance system company-wide, it’s essential to gather associates with a broad range of expertise and experience. This cross-functional organization will monitor global and industry standards and provide development support to each business unit. Moving forward, we will continue strengthening this company-wide initiative in collaboration with the Quality Control Division and business units to drive AI Quality Assurance.

Promoting AI Quality Assurance for Society

DENSO's AI quality assurance efforts are not limited to internal activities. We aim to contribute to ongoing rulemaking related to AI quality by participating in various external committees.

For example, internationally, DENSO participates in the AI subcommittee of the ISO/IEC Joint Technical Committee (ISO/IEC JTC 1/SC 42) and the working group on AI safety in automotive applications (ISO/TC 22/SC 32/WG 14). Domestically, DENSO is involved in the Japanese Society for Quality Control’s Study Group on AI Quality Agile Governance and the AIST’s Committee for Machine Learning Quality Management (MLQM committee). Furthermore, DENSO actively promotes AI quality management by serving as an instructor for companies looking to integrate AI into their operations.

“The international rules governing AI quality assurance and safety are currently being shaped. By participating in these committees, we aim not only to gather the latest insights but also to contribute our own expertise to the global rule-making process. This, we believe, is one way we can give back to society,” says Kuwajima.

The landscape surrounding AI development is constantly evolving, and we recognize that the frameworks for evaluating risk and ensuring quality must adapt in tandem. By fostering close collaboration between its cross-company organization and individual business units, DENSO remains committed to advancing its product development efforts.

“Since our founding, DENSO has prioritized quality and safety in manufacturing as a means of addressing societal challenges. By operating an AI quality assurance framework, we aim to fulfill our responsibility in managing AI quality risks while supporting the innovations that AI brings to various products,” Kuwajima concludes.
