
May 20, 2022

TECH & DESIGN

Accelerating automotive development by combining real-life and virtual-environment approaches (part one)

Creating teaching data for AI deep learning in virtual environments


AD&ADAS Systems Engineering Div. Takahiro Koguchi

Takahiro Koguchi has been building experience with games and programming since early childhood, and went on to study quantum mechanics, chaos simulations and related fields at university. He joined a video game maker after graduation and worked there for 15 years before moving to DENSO. He now applies the virtual-environment R&D skills from his previous job to developing driver assistance systems using sophisticated techniques.

The latest video game technologies enable the creation of visuals that are so impressive they can easily be mistaken for real-life, filmed footage. They also enable more accurate physical simulations. DENSO Corporation is searching for ways to apply these game development technologies to vehicle-related system development.

In part one of this two-part article, we take a look at driver assistance system simulations utilizing virtual environments with highly realistic situation and parameter re-creations, with the aim of greatly improving the efficiency of the massive numbers of tests that must be carried out during vehicle-related system development.


Utilizing cutting-edge game development technologies in vehicle-related system development

It’s my understanding that DENSO uses video game development technologies to develop driving support systems. It seems unusual that a company like DENSO, which is so deeply involved in creating vehicle-related systems, would use game-building technologies.

Takahiro Koguchi: I was actually involved in developing video game consoles at my previous job. My work involved graphics processing unit (GPU) planning as well as R&D related to CG and image processing technologies, and along the way I developed an interest in finding ways to use CG-related technologies in real-life applications. Driving support systems had finally begun to catch on throughout society, so I wanted to find ways to apply game-related techniques and technologies in this field. That’s why I joined DENSO in 2017.

Now, by combining various technologies related to CG, GPUs and the like, I create virtual environments which are used for developing advanced driver assistance systems (ADAS) and the like.

I’ve heard that car-racing game developers often have a personal interest in automobiles. Is that also the case with you, Mr. Koguchi?

Koguchi: Well, I have always enjoyed the outdoors, like visiting the beach and the mountains, and I enjoy driving out there myself. I’ve never had the chance to buy a racing car or compete on a circuit [laughs], but I did pass DENSO’s in-house license test for test drivers, which I took in order to develop a better understanding of the fundamentals of vehicle actions and motions.

I spent about two months practicing for the license test. During that period, I experienced high accelerations of around 0.5 to 0.7 G, and one time I nearly flipped the car over while taking a banked turn at high speed! My instructor did a very thorough job of teaching me about the mechanisms and movements of vehicles, which gave me a much better understanding of how cars work.

That’s really impressive!

Koguchi: Through this experience, I realized the importance of driving actual vehicles in real life, rather than just using virtual-environment-based CG for R&D operations. Studying and practicing for the license test vastly increased my understanding of driving technologies.

How did you originally plan to utilize your game-development knowledge in vehicle system development?

Koguchi: I figured I could apply that knowledge to solve problems in the area of ADAS development. When designing parking assist and automation features, for example, the vehicle’s system must accurately identify the correct parking location, and stop the vehicle if a dangerous situation arises. Massive amounts of data on parking environments must be learned by the AI, and system verification testing carried out, in order to successfully develop these types of detection and recognition functions. In fact, with every new function that’s added and new vehicle model that’s developed, even more data must be incorporated.

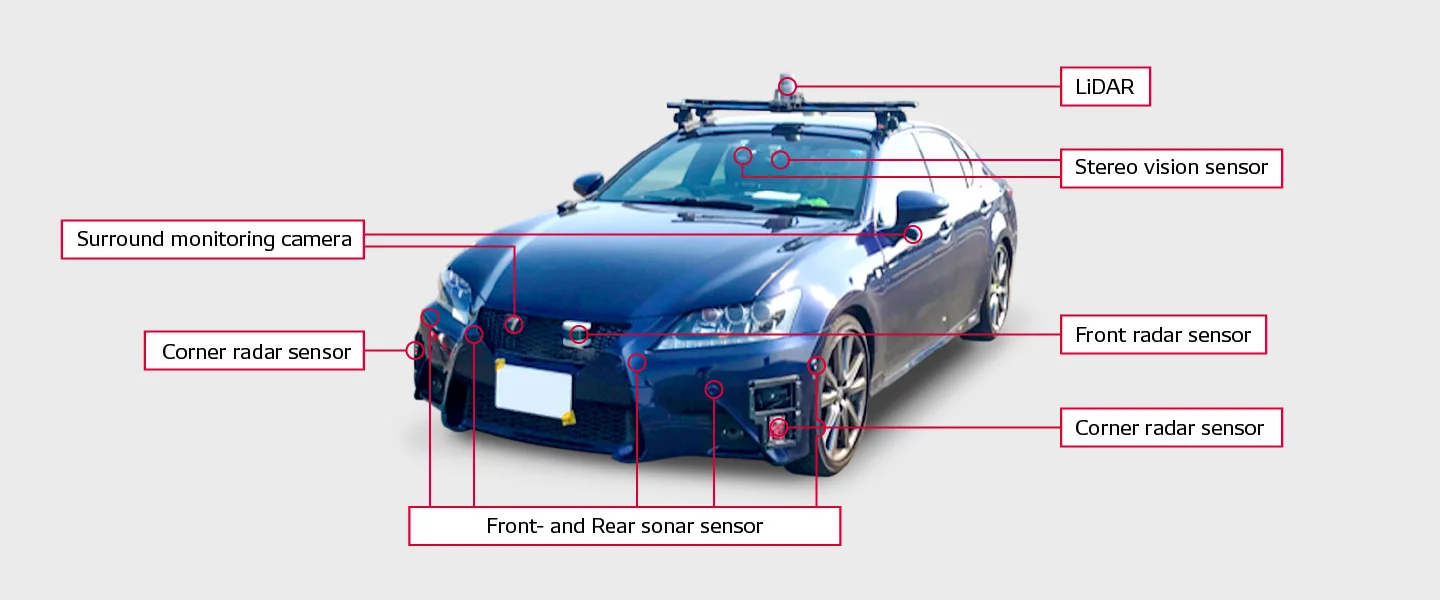

This data is collected via onboard sensors in vehicles, and this collection process involves a number of challenges. To guarantee system safety, a lot of time is required to collect the necessary data from various sensors, but by using game development technologies we can create realistic environments in real time, and then use these to simulate interactions between humans and machines. Furthermore, this approach reduces the amount of time and effort required to achieve accurate measurements compared with approaches that use actual, physical vehicles—simulations make it much easier to gather the necessary data.

In short, we believe that the virtual environments often seen in game development can enable data to be collected much more efficiently during ADAS development.

You use the Unreal Engine developed by Epic Games to build virtual environments, don’t you?

Koguchi: Yes. We chose a game engine that includes a range of useful functions in order to better optimize our development operations.

The Unreal Engine is outstanding because it can rapidly render high-quality virtual environments based on asset data. Furthermore, its source code is publicly available, there are a number of plugins available which expand functionality, and its RHI—or Render Hardware Interface—enables command-based analyses and optimizations. From an engineer’s standpoint, it’s a very attractive platform.

Improving efficiency for simulation-based development using virtual environments

How do you use the Unreal Engine in ADAS development?

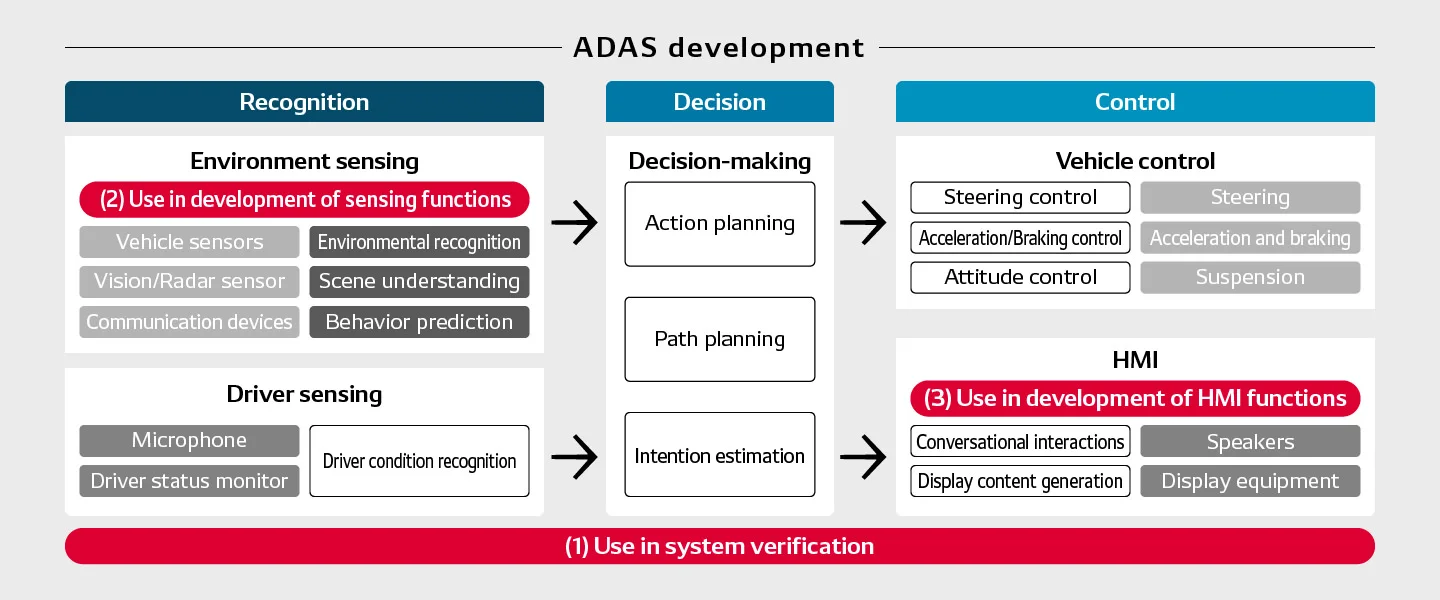

Koguchi: For our current project, we use the engine in three main ways. The first is system verification. By carrying out verification tests using virtual environments in addition to real-life environments, we are able to reduce the time and effort required for verifying and evaluating actual-vehicle systems.

Our second usage of the engine is for developing sensing functions. We utilize virtual environments to more efficiently handle everything from deep learning to system verification as part of developing ADAS sensing-function algorithms.

Finally, our third usage is for developing the human–machine interface (HMI) functions. One example is developing the user interface used in our parking assist system.

What kinds of equipment do you use in R&D?

Koguchi: We run the Unreal Engine on a personal computer with a high-performance GPU. The ability to carry out development without needing specialized equipment is one of the biggest advantages of a game engine.

In regard to the first application you mentioned—system verification—do you essentially create a high-end car simulation game for use in virtual testing?

Koguchi: Basically, yes, although our goals differ from those of game development. We want to reliably confirm which parts of the vehicles move in which directions and at which velocities during operation. In other words, the virtual environment is designed for re-creating actual conditions and carrying out system verification tests.

Currently, how do you go about carrying out real-life, non-virtual technology evaluation tests?

Koguchi: We drive test vehicles equipped with multiple sensors on various public roads and on the DENSO test courses to gather the data necessary for evaluating system quality. This involves meticulous verification of travel, turning and stopping performance in the actual vehicle. The test course facilities re-create real-life driving situations, including steep inclines, pedestrians suddenly appearing in the street, and nighttime and rainy-weather driving.

On top of these tests, we use virtual environments to acquire comprehensive verification test data for circumstances which are difficult to re-create through testing on public roads and the test course, and then confirm the results of virtual-environment tests using actual vehicles. This enables us to integrate the required logic into the vehicle’s system and ensure safe, trustworthy driving operations.

We believe that virtual testing reduces the time spent on repeating operations intended to identify potential problems, which reduces the development time overall.

It seems like the physical tests are quite complex. How are you able to re-create these tests and conditions in a virtual environment?

Koguchi: External-environment models, sensor simulations using sensor models, and vehicle movement models are required to conduct virtual-environment-based technology evaluation tests. In video games, external-environment models are the virtual environments in which your character or vehicle moves around. For our tests, we create environment models for downtown areas, parking lots, residential neighborhoods and the like, as well as object models for things like poles and traffic signals. Then, by adding in the factors of time and weather changes, we can produce a wide range of environmental variations. Working together with the game development studio ORENDA, we used this approach to create a parking lot environment on the Unreal Engine.
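As a rough illustration of how adding time and weather factors multiplies the number of environmental variations, the sketch below enumerates every combination of a few environment, time-of-day and weather labels. The label values and the function name `enumerate_scenarios` are hypothetical choices made for this example; the interview does not describe how DENSO actually parameterizes its scenes.

```python
from itertools import product

# Hypothetical labels: the interview names these categories only in general terms.
ENVIRONMENTS = ["downtown", "parking_lot", "residential"]
TIMES_OF_DAY = ["day", "dusk", "night"]
WEATHER = ["clear", "rain", "fog"]


def enumerate_scenarios(environments, times_of_day, weather):
    """Return every (environment, time of day, weather) combination as a dict."""
    return [
        {"environment": env, "time_of_day": tod, "weather": wx}
        for env, tod, wx in product(environments, times_of_day, weather)
    ]


scenarios = enumerate_scenarios(ENVIRONMENTS, TIMES_OF_DAY, WEATHER)
print(len(scenarios))  # 3 * 3 * 3 combinations
```

Even three options per factor yield 27 scenes, which is why each additional factor quickly enlarges the set of conditions a virtual test can cover.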

You also mentioned sensor simulations. Does this refer to the creation, in a virtual environment, of sensor-equipped vehicles that resemble actual vehicles?

Koguchi: With sensor simulations, various virtual sensor models can be swapped in as needed in order to verify and evaluate sensor performance for the virtual test vehicle. Testing with a real-time camera usually requires capturing at least 30 frames per second, even if image quality must be lowered in order to achieve this. In contrast, when carrying out virtual sensor performance verification and assessment tests where real-time measurement is not required, virtual environments enable high-precision simulations with various complex factors added in.
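The trade-off described here comes down to simple arithmetic: at 30 images per second, each image has to be produced in about 33 ms, whereas an offline simulation has no such limit. The sketch below works through that calculation; the function names and the example render times are hypothetical and are not taken from the interview.

```python
def frame_budget_ms(frames_per_second: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    if frames_per_second <= 0:
        raise ValueError("frames_per_second must be positive")
    return 1000.0 / frames_per_second


def fits_real_time(render_ms: float, frames_per_second: float = 30.0) -> bool:
    """True if a frame rendered in render_ms keeps up with the target rate."""
    return render_ms <= frame_budget_ms(frames_per_second)


print(round(frame_budget_ms(30.0), 1))  # 33.3 ms per frame at 30 frames per second
print(fits_real_time(20.0))             # a 20 ms frame keeps up with real time
print(fits_real_time(250.0))            # a 250 ms high-precision frame does not
```

A frame that takes 250 ms to render is unusable for real-time camera testing but perfectly acceptable when the results are only needed offline.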

As for vehicle movement modeling, we customize vehicle models through the Unreal Engine, creating situations where automated parking is carried out at slow speeds and simulating center-of-gravity and suspension performance factors therein.

It seems that sensor simulation might prove difficult to use in certain types of testing.

Koguchi: Conditions vary based on the type of function we’re trying to develop. Take measurements via sonar-based sensors, for example: Sonar detects convex and concave variations on surfaces based on how emitted sound waves are reflected back to the sensor. Engineers in charge of developing sonar sensors often request simulation conditions with very high precision for their test operations. However, some say that the multiple sensors work as a whole in order to guarantee the safety of the entire system, so such attention to fine details isn’t necessary. Each department has its own requirements, and we make fine adjustments to accommodate them.

Comparing and tying together real-life and virtual testing

How do you actually conduct the technological assessment tests using these virtual environments? Do you use a setup like in a driving game at a video arcade—a driver’s seat with a steering wheel, and perhaps a virtual reality headset?

Koguchi: We have tried that kind of approach, but we’re now aiming to ensure that virtual-environment tests provide the same quality of driving data that we would get from real-life tests in an actual vehicle. This entails looking at various vehicles operating within the virtual environment and checking whether the test vehicle follows the same course as its real-life counterpart, and also checking whether the same type of automated braking performance is achieved when a pedestrian suddenly enters the roadway. These are just some of the things we need to verify.

How do you compare and tie together the results of real-life tests and virtual-environment tests?

Koguchi: It is not realistic to aim for perfectly matched conditions in both types of testing; we cannot produce the exact same results with both methods. Instead, we have referred to the ongoing work of Professor Stefan-Alexander Schneider from Germany’s Kempten University of Applied Sciences. He’s quantitatively evaluating the disparities between real-life and virtual environments.

We usually use a recognition device,* inputting visual image data from both real and virtual environments and utilizing the resulting quantitative evaluations of the differences. For AI detection of humans, vehicles and various objects, measures such as IoU (Intersection over Union) are used, and based on these we compare virtual and real detection probabilities, as percentages, for successful detection of other vehicles by the AI.
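For readers unfamiliar with IoU, the following minimal sketch computes it for two axis-aligned bounding boxes and then derives a detection rate, as a percentage, for a set of real and a set of virtual images. The box format `(x_min, y_min, x_max, y_max)`, the 0.5 threshold, the function names and the sample boxes are all assumptions made for illustration; the interview does not specify how DENSO's evaluation is implemented.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x_min, y_min, x_max, y_max)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap, clamped to zero when the boxes are disjoint.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def detection_rate(pairs, threshold=0.5):
    """Percentage of ground-truth boxes matched by a prediction with IoU >= threshold.

    pairs is a list of (ground_truth_box, predicted_box_or_None).
    The 0.5 threshold is a common convention, assumed here.
    """
    if not pairs:
        return 0.0
    hits = sum(
        1 for truth, pred in pairs
        if pred is not None and iou(truth, pred) >= threshold
    )
    return 100.0 * hits / len(pairs)


# Hypothetical results for the same four vehicles in real and virtual footage.
truth = (0, 0, 10, 10)
real_pairs = [(truth, (0, 0, 10, 10)), (truth, (0, 0, 10, 5)),
              (truth, None), (truth, (1, 1, 10, 10))]
virtual_pairs = [(truth, (0, 0, 10, 10)), (truth, (0, 0, 10, 9)),
                 (truth, (1, 0, 10, 10)), (truth, (1, 1, 10, 10))]

gap = detection_rate(virtual_pairs) - detection_rate(real_pairs)
print(detection_rate(real_pairs), detection_rate(virtual_pairs), gap)
```

The difference between the two rates gives one simple number for how far the virtual environment diverges from the real one for a given recognition task.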

Moreover, when using a vehicle’s own-position estimation technologies for automated parking, even if the results for feature parameters during the estimation process don’t match up exactly between the actual and virtual environments, as long as the final estimation results are accurate we won’t have a problem. With this type of usage, even if time-sequenced data doesn’t match exactly between the two, we can still assess disparity amounts on the macro level. No matter how one approaches this issue—in other words, no matter how much money you spend in pursuit of greater accuracy—some degree of disparity is unavoidable.
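One way to picture this distinction is to score a real run and a virtual run on their final estimated position, while summarizing the step-by-step differences with a single macro-level figure. The sketch below does this for 2D position estimates; the function names, the sample coordinates and the use of a mean point-wise distance as the macro-level measure are assumptions for illustration, not DENSO's actual method.

```python
import math


def final_position_error(estimated_path, target):
    """Distance between the last estimated (x, y) position and the target position."""
    end_x, end_y = estimated_path[-1]
    return math.hypot(end_x - target[0], end_y - target[1])


def mean_pointwise_gap(path_a, path_b):
    """Average distance between corresponding samples of two paths (macro-level disparity)."""
    n = min(len(path_a), len(path_b))
    if n == 0:
        raise ValueError("both paths must contain at least one sample")
    total = sum(
        math.hypot(ax - bx, ay - by)
        for (ax, ay), (bx, by) in zip(path_a[:n], path_b[:n])
    )
    return total / n


# Hypothetical position estimates (in meters) from a real and a virtual parking run.
target = (3.0, 0.0)
real_run = [(0.0, 0.0), (1.0, 0.2), (2.0, 0.1), (3.0, 0.0)]
virtual_run = [(0.0, 0.0), (1.0, -0.1), (2.0, 0.3), (3.0, 0.05)]

print(final_position_error(real_run, target))
print(final_position_error(virtual_run, target))
print(mean_pointwise_gap(real_run, virtual_run))
```

In this made-up example the intermediate samples differ noticeably between the two runs, yet both end within a few centimeters of the target, which is the situation the paragraph above describes as acceptable.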

Regarding our efforts to compare all visual images taken in virtual operations with those from real-life operations, we have not yet achieved our goals in terms of efficient data creation. Therefore, we are searching for ways to better identify and assess disparities, as well as ways of using virtual simulation technology. We believe it is important to create definitions for models and increase the number of real-versus-virtual comparison examples available as references.

* A device and its software, used in deep learning and other machine learning operations, that facilitate learning of determination criteria for categorization, recognition, etc.

Given that virtual environments can simulate weather conditions and similar factors, how do you compare these with the results of actual-vehicle testing?

Koguchi: The DENSO test course includes a facility that simulates rainy conditions, and we use this to create realistic rainy-weather situations during testing. However, because there are a nearly infinite number of possible combinations between scenarios and target functions in these tests, we have to look at available scenarios, and narrow down and choose the ones which best suit our purposes.

In contrast to game development, automotive development must take into consideration the lives of human beings. That’s why we are working by trial and error on ADAS development that combines real- and virtual-environment approaches, and searching for useful technology applications. We’re rethinking the way in which we will carry out ADAS development moving forward.
