
Aug 26, 2021

TECH & DESIGN

New horizons in agriculture through AI-based robotic “eyes”

Development of the automated tomato-harvesting robot


Takaomi Hasegawa, Production Engineering Division

Takaomi Hasegawa joined DENSO in 2006. He majored in intelligent robot studies at university, and after joining the company was assigned to car navigation system circuit design in the ICT Engineering Division. Hasegawa responded to an in-house advertisement for a position in the Robotics Systems Development Department and was assigned there in 2014, where he developed agricultural robot software and AI image recognition technology. He currently works for the Production Engineering Division.

As society ages, labor shortages and other problems are creating increasingly difficult circumstances in the agricultural industry, and many people are looking to robots for solutions. DENSO is working together with the Mie Prefecture-based agricultural facility Asai Nursery to develop a tomato-picking robot. Let’s take a look at how image recognition technology has been developed to create robotic “eyes” for agricultural robots.


Establishing a development laboratory in an unused part of a DENSO plant

— I was told that you started out developing car navigation system hardware here at DENSO. That’s quite different from agricultural robot software, which is your current assignment. What inspired you to make that switch?

Hasegawa: I studied robotic intelligence at university, but after getting hired at DENSO I was assigned to circuit design of CPU boards for car navigation systems. That work was really interesting and enjoyable, but I felt a disconnect between the products I made and their end users, which began to bother me. Once I had finished a product, I immediately moved on to developing another one, and so never had a chance to get any direct feedback from customers on the previous product.

Furthermore, I began to get a sense that products in the future would involve combined development of hardware and software rather than separate development of each, and around that time DENSO posted an in-house ad for a position in a project to make robots that would be useful to people. I realized this was an opportunity to put my university education to use, so I applied.

— Had DENSO already decided to have project members develop agricultural robots?

Hasegawa: Yes. At that point, DENSO had made only a general decision to pursue joint research with a university and develop a tomato-harvesting robot. One of the company’s senior managers had already joined the project, and I was assigned as its sole software developer.

We started by talking with farmers from all around Japan and even tried doing some agricultural work ourselves, which enabled project team members to personally experience and understand agricultural tasks and operations. That helped us discover which parts of harvesting were the hardest.

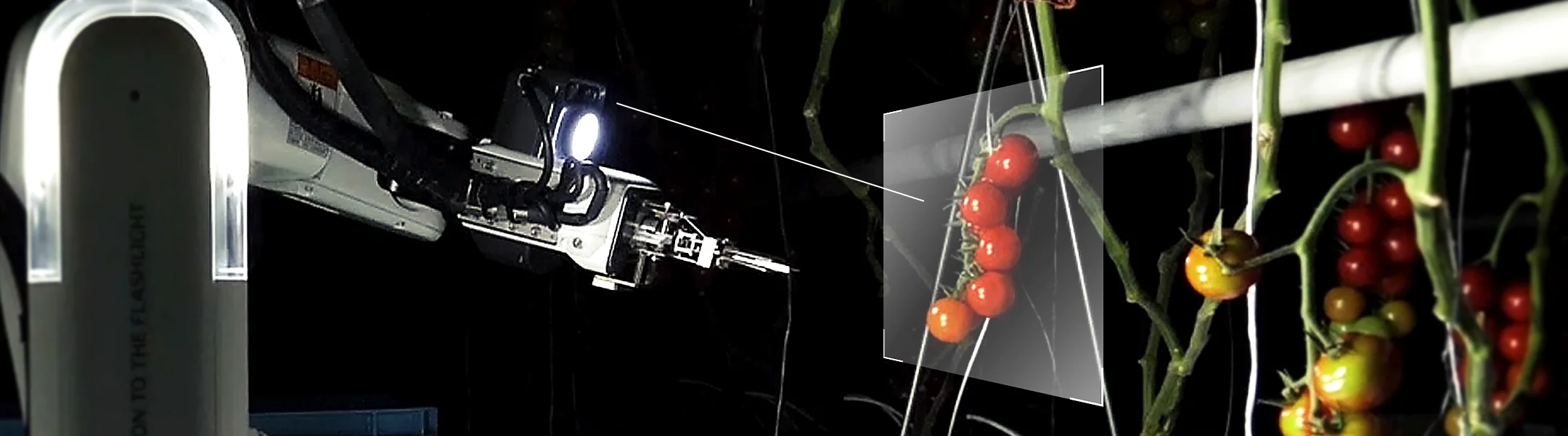

After this, we asked management to let us use an open, unused space in a head office plant as our laboratory. Here we made imitation tomatoes by hand, hung them up, and conducted tests to see if our robots were able to pick them. We repeated these tests and refined the software each time.

Joint robot development with an agricultural nursery

— Once you had a clearer picture of how things should work, did you start carrying out tests onsite at the nursery?

Hasegawa: Yes, we did. However, testing inside the laboratory and testing at an actual agricultural facility proved to be very different, and this really taught us the value of our partnership with the nursery staff.

If you’ve ever used one of those robotic vacuum cleaners, you know that it’s necessary to tidy up the room before activating it, otherwise it won’t be able to reach all parts of the floor. In a similar way, our agricultural robot not only required performance improvements, but also an operating environment where it could move easily in order to effectively harvest tomatoes.

So, we needed to work closely with an agricultural facility. We found a nursery with the techniques and skills required to keep tomato plants at specified heights and orientations, convinced them of the necessity of arranging the growing environment to help the robot operate effectively, and persuaded them to become a joint development partner. This new partner was Asai Nursery in Mie Prefecture, and together we developed the automated tomato-picking robot. We founded the joint venture AgriD Inc. for this project and proceeded to test the robot using four hectares of greenhouse space.

— So you sought solutions to real-world problems together with the nursery?

Hasegawa: We built the testing environment together with the nursery staff. From the outset, we confirmed the scope of crop-management operations that Asai Nursery could handle and determined what we hoped to achieve with the robot. We made sure everyone understood these points, and we defined, in quantifiable terms, several important factors such as plant height and tomato orientation and made sure everyone agreed on them.

We demonstrated our robots to the nursery staff from the prototype phase onward. This was a little embarrassing because the early prototypes were so primitive, but we did this so that they would understand the limitations of what a robot could do. We were able to explain various things during these demonstrations—for example, how the robot arm could not pick tomatoes on the rear side of the plant—and asked for their help with such issues.

To harvest cherry tomatoes, we used a “high wire” approach, which involves installing wires along the top of the greenhouse and attaching guide strings between these wires and the plants below so that the main stems stand up straight. Clips were used to adjust the orientation of each stem. These techniques were newly introduced at the nursery for this project. Completing prototypes quickly in the early stages and building consensus among all project members and everyone else involved was extremely important.

Repeated onsite tests to realize usable, software-based image recognition

— Can you explain the software you use for robot development?

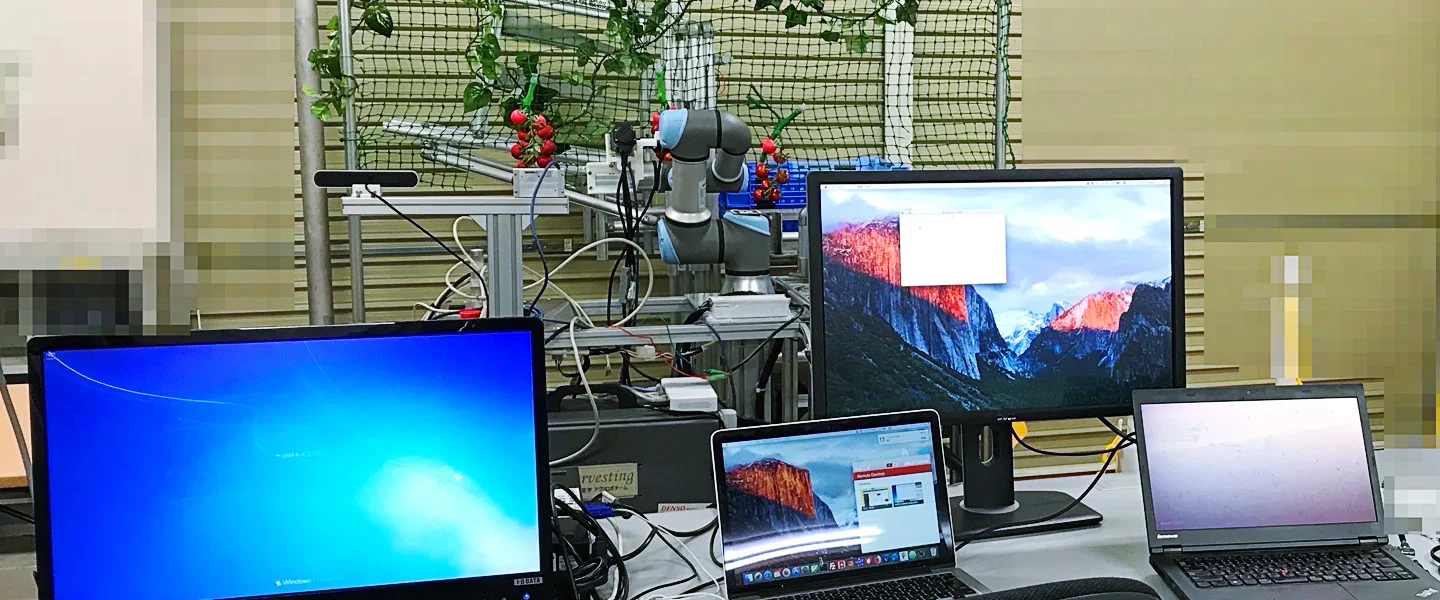

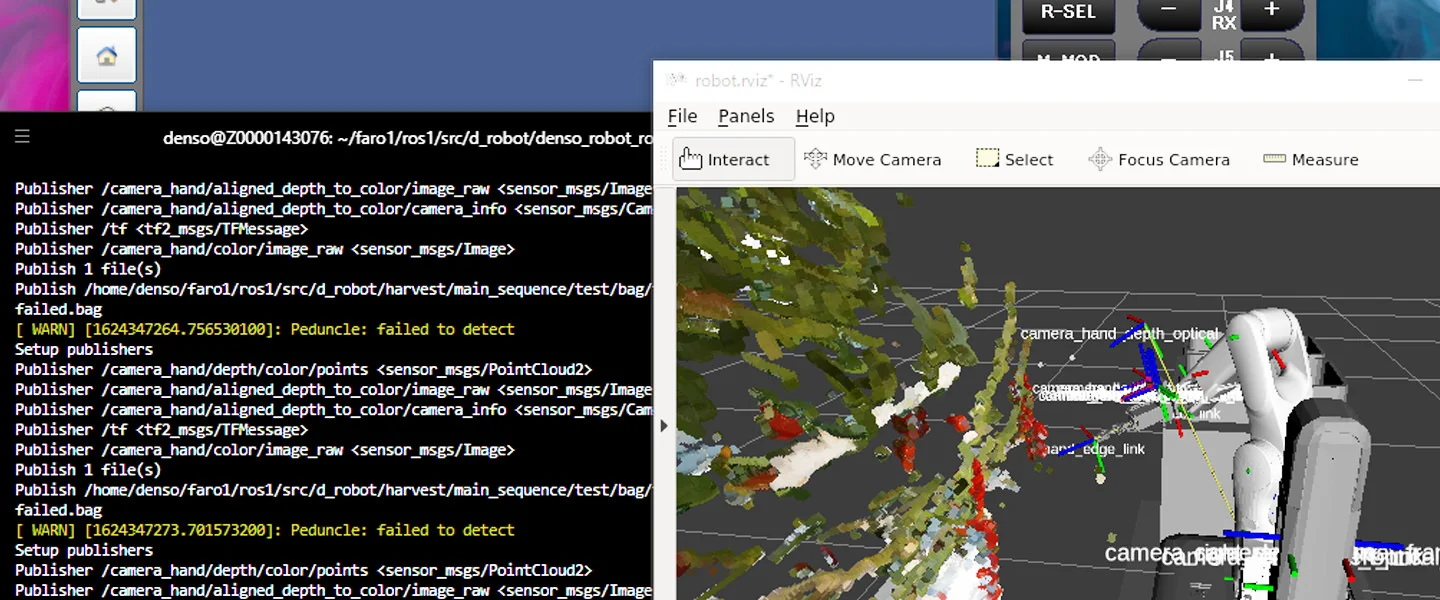

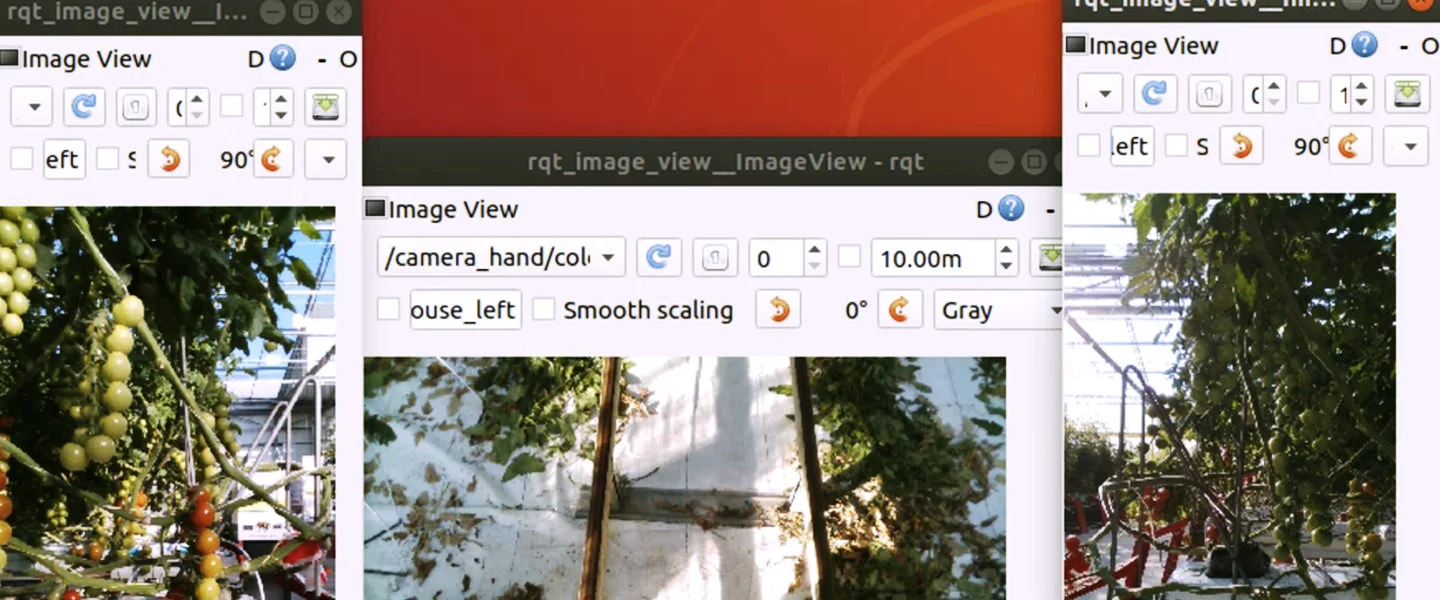

Hasegawa: The robot uses a DENSO WAVE robot arm (VS-060) and an industrial computer (IoT Data Server). The IoT Data Server runs Linux (Ubuntu) as its OS, along with Robot Operating System (ROS), a widely used robot-development middleware. C++ and Python are our primary programming languages, and we use multiple AI frameworks, including TensorFlow and PyTorch.

As for robot software development, we use physical robot units along with a DENSO WAVE integrated development environment (WINCAPS III) and an emulator (VRC). Using these along with agricultural facility data we collect, we can simulate agricultural production environments on the computer.

— Which aspects of the robot development process were the most challenging?

Hasegawa: We had real trouble improving the precision of our image recognition technology. There were two major problems when harvesting cherry tomatoes.

The first was accurately recognizing tomatoes with irregular shapes. In industrial applications, the shapes of the target items or workpieces are predetermined and consistent, so image recognition is straightforward. With agricultural produce, however, we found that every cherry tomato had a different shape, and other factors, such as the amount of sunlight, varied as well.

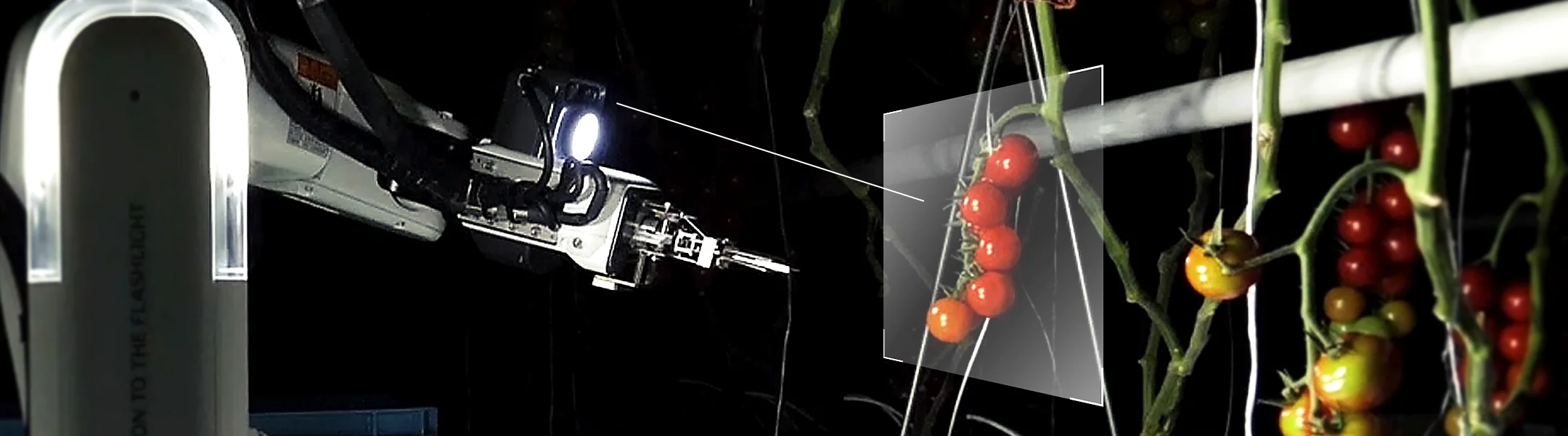

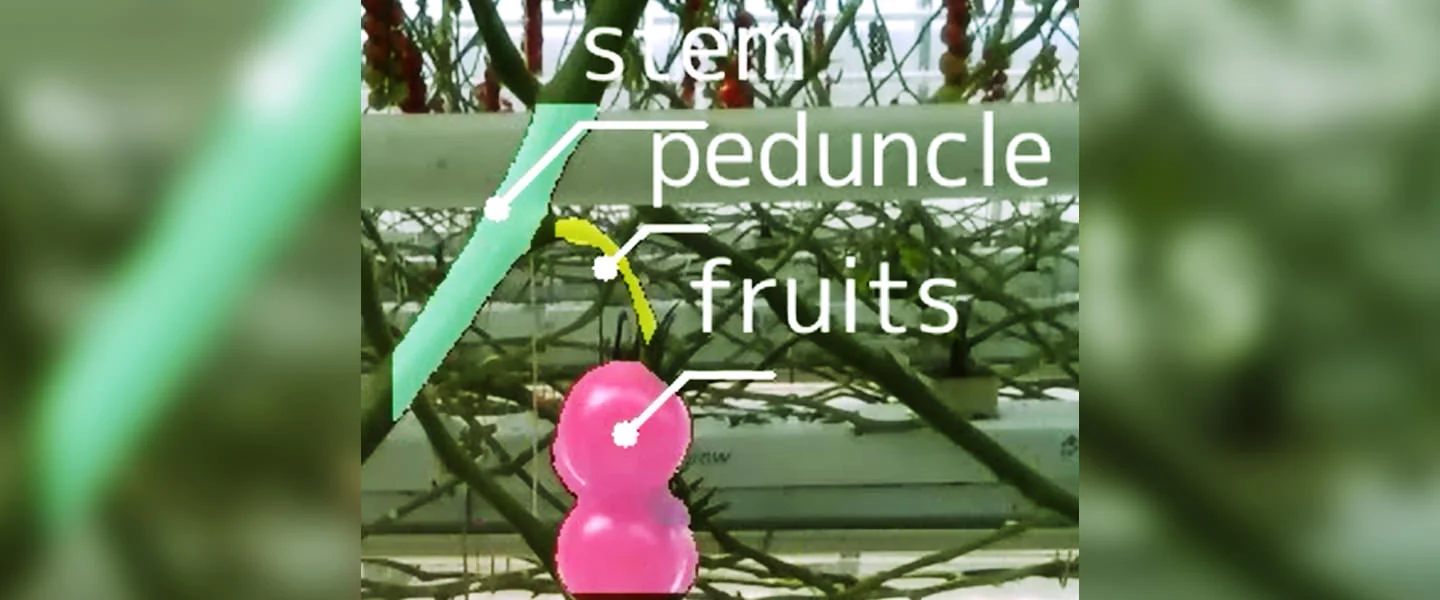

The second major problem was detecting fruit peduncles, the narrow stalks to which the fruit are attached. Bunches of cherry tomatoes hang from fine peduncles, and the robot must cut each peduncle to collect the fruit. However, because each peduncle is only about two or three millimeters thick, it can be hard to see in direct sunlight, which hinders proper detection.

— How did you solve these problems?

Hasegawa: AI was the key; we used deep learning technology. The robot is designed to detect obstacles, detect bunches of cherry tomatoes, determine ripeness, and detect tomato peduncles. These functions utilize image recognition based on numerous unique AI models. We read a lot of academic papers on the subject and equipped the robot with cutting-edge AI models, then carried out assessment and comparison tests.

For detecting obstacles and determining crop ripeness, we use image recognition AI models built on a convolutional neural network (CNN), whereas for detecting bunches and individual fruit positions we use object detection AI models. To detect peduncles, we use an image recognition technique called semantic segmentation, which classifies the image pixel by pixel for precise detection. Detecting tomato peduncles in bright sunlight, a major challenge, was made possible by combining the technologies just described with a proprietary complementary algorithm designed together with DENSO’s Measurement & Instrumentation Engineering Division.
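The pixel-by-pixel idea behind semantic segmentation can be illustrated with a small sketch. Assuming a model has already produced a score for every class at every pixel, the final step assigns each pixel its highest-scoring class. The class names, the `segment` and `pixel_count` helpers, and the toy scores below are hypothetical and are not taken from DENSO’s system; in practice a framework such as PyTorch or TensorFlow would produce these scores as tensors.

```python
from typing import List, Sequence

# Hypothetical label set for this sketch (not DENSO's actual classes).
CLASS_NAMES = ["background", "peduncle"]


def segment(scores: Sequence[Sequence[Sequence[float]]]) -> List[List[int]]:
    """Per-pixel argmax: scores[y][x][c] is the score of class c at pixel (y, x).

    Returns a label map with the same height and width, where each entry is
    the index of the highest-scoring class (ties go to the lower index).
    """
    return [
        [max(range(len(pixel)), key=pixel.__getitem__) for pixel in row]
        for row in scores
    ]


def pixel_count(label_map: List[List[int]], class_index: int) -> int:
    """Count how many pixels were assigned to the given class."""
    return sum(label == class_index for row in label_map for label in row)


# Toy 2x3 "image": each pixel holds [background score, peduncle score].
scores = [
    [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]],
    [[0.6, 0.4], [0.1, 0.9], [0.95, 0.05]],
]
label_map = segment(scores)
print(label_map)  # [[0, 1, 0], [0, 1, 0]]
print(pixel_count(label_map, CLASS_NAMES.index("peduncle")))  # 2
```

Because every pixel gets its own label, a thin structure only two or three pixels wide can still be picked out, which is why this kind of model suits peduncle detection better than bounding boxes.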

However, we found that simply applying AI models did not achieve the desired precision in actual use at the nursery. In deep learning, training depends heavily on settings called hyperparameters, such as the learning rate, which greatly affect model performance. We had to tune these hyperparameters by trial and error, learning as we went.
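Trial-and-error tuning is often organized as a random search: sample candidate hyperparameters, score each one, and keep the best. The sketch below assumes two hypothetical hyperparameters (learning rate and dropout) and replaces actual model training with a toy scoring function, so it illustrates only the search loop, not the team’s actual procedure.

```python
import math
import random
from typing import Callable, Dict, Tuple

Params = Dict[str, float]


def random_search(
    evaluate: Callable[[Params], float],
    n_trials: int,
    seed: int = 0,
) -> Tuple[Params, float]:
    """Try randomly sampled hyperparameters and keep the best-scoring set."""
    rng = random.Random(seed)  # seeded so a search can be reproduced
    best_params: Params = {}
    best_score = -math.inf
    for _ in range(n_trials):
        params = {
            # Learning rate sampled log-uniformly between 1e-5 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            # Dropout rate sampled uniformly between 0.0 and 0.5.
            "dropout": rng.uniform(0.0, 0.5),
        }
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def toy_validation_score(params: Params) -> float:
    """Hypothetical stand-in for 'train a model, then measure validation accuracy'.

    It peaks at learning_rate = 1e-3 and dropout = 0.2.
    """
    lr_term = (math.log10(params["learning_rate"]) + 3) ** 2
    return 1.0 - 0.1 * lr_term - (params["dropout"] - 0.2) ** 2


best_params, best_score = random_search(toy_validation_score, n_trials=200, seed=1)
print(best_params, round(best_score, 3))
```

Sampling the learning rate on a log scale is a common design choice, since useful values often span several orders of magnitude.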

— How did you discover better AI models?

Hasegawa: I’m not an expert in developing AI models, so I asked other project members for help. You may have heard of Kaggle, a well-known competition platform for deep learning and other machine learning fields; we held a similar competition within the project team. We taught the basics of deep learning to members with no experience in the field, held competitions to test their skills, and then used the most precise models in our robot. Official Kaggle competitions award prize money to the winning teams, but unfortunately we could not pay our competition winners!

To tell the truth, because I had the most experience in deep learning, I had expected to win the competition. However, I ended up losing in spectacular fashion the first time around. When approaching this type of competition with textbook-style knowledge, one tends to go for greater numbers of parameters in order to get the best performance. However, some of my fellow members took a different route, following their intuition instead and using fewer parameters to achieve better performance.

This puzzled me at first, so after the competition I read various research papers to learn more about the matter, and it turns out that using fewer parameters produces better results in some cases. Standard approaches do not always produce the best results, and some fine tuning is required when working in agriculture. This has made the whole process very interesting for me: simply having lots of knowledge is not everything when building AI models; it’s also important to trust one’s intuition like an artist.
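What “number of parameters” means can be made concrete with a convolutional layer: its trainable parameter count is kernel height × kernel width × input channels × output channels, plus one bias per output channel. The two small networks compared below are hypothetical, chosen only to show how quickly the count grows with channel width; they are not the competition models.

```python
from typing import List, Tuple

# (kernel size, input channels, output channels) for each 2D convolution layer.
Layer = Tuple[int, int, int]


def conv_params(kernel: int, c_in: int, c_out: int, bias: bool = True) -> int:
    """Trainable parameters of a square-kernel 2D convolution layer."""
    weights = kernel * kernel * c_in * c_out
    return weights + (c_out if bias else 0)


def total_params(layers: List[Layer]) -> int:
    """Sum the parameters of a stack of convolution layers."""
    return sum(conv_params(k, c_in, c_out) for k, c_in, c_out in layers)


# Hypothetical networks for an RGB input (3 channels): same depth, different width.
wide = [(3, 3, 64), (3, 64, 128), (3, 128, 256)]
narrow = [(3, 3, 16), (3, 16, 32), (3, 32, 64)]

print(total_params(wide))    # 370816
print(total_params(narrow))  # 23584
```

Under these assumptions the narrow network has roughly one-sixteenth as many parameters. Fewer parameters generally means less capacity to memorize a small training set, which is one reason a smaller model can generalize better on limited field data.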

— I have heard that annotation, or labeling of data used in AI model learning, is an important part of deep learning. Did you handle annotation in any special way for this project?

Hasegawa: Our testing cycle entailed conducting daily robot tests, collecting the resulting data, annotating that data, improving the AI models, installing the improved models on the robots, and then testing again. With peduncle detection in particular, we used semantic segmentation for pixel-level object detection, so annotation had to be carried out pixel by pixel to enable precise detection. This was highly labor-intensive, so I asked each of our roughly 20 project team members to try to complete 100 annotations per day for about one month.

Many organizations choose to outsource annotation work, but our annotation work is quite complicated. Different people tend to make different judgments about which pixels to fill in, even when labeling something like a peduncle, so we have to establish clear, firm judgment standards. Even with relatively clear rules, people sometimes stray from the standards. However, making major changes to the rules would mean redoing everything, so we struggled a lot with how to handle these exceptions. Even so, we had to redo the annotations about twice to improve precision. Because annotations directly determine an AI model’s recognition precision, such rework is unavoidable; otherwise, the end results suffer.
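One common way to quantify how much two annotators disagree on a pixel-level label is the intersection over union (IoU) of their masks. The sketch below assumes binary masks stored as nested lists (1 for peduncle, 0 for background) and a hypothetical review threshold of 0.8; neither the metric nor the threshold is described in the interview.

```python
from typing import List

Mask = List[List[int]]  # 1 = peduncle pixel, 0 = background


def iou(a: Mask, b: Mask) -> float:
    """Intersection over union of the foreground pixels of two binary masks."""
    if len(a) != len(b) or any(len(ra) != len(rb) for ra, rb in zip(a, b)):
        raise ValueError("masks must have the same shape")
    pairs = [(bool(x), bool(y)) for ra, rb in zip(a, b) for x, y in zip(ra, rb)]
    intersection = sum(x and y for x, y in pairs)
    union = sum(x or y for x, y in pairs)
    # If neither annotator marked any pixel, treat that as full agreement.
    return 1.0 if union == 0 else intersection / union


def needs_review(a: Mask, b: Mask, threshold: float = 0.8) -> bool:
    """Flag an image whose two annotations agree less than the (hypothetical) threshold."""
    return iou(a, b) < threshold


annotator_1 = [[0, 1, 1],
               [0, 1, 0]]
annotator_2 = [[0, 1, 0],
               [0, 1, 1]]
print(iou(annotator_1, annotator_2))           # 0.5
print(needs_review(annotator_1, annotator_2))  # True
```

Scoring a small set of images that everyone annotates this way can show where the judgment standards are being read differently before a full relabeling is needed.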

In order to boost team morale, I share annotation progress rates along with image recognition precision information with members. Precise teaching improves AI performance, and by showing this clearly to members in visual form, I help them feel more personally involved with improving our AI. We try to approach each annotation with a feeling of gratitude, as it helps to foster and improve our AI. Therefore, we refer to this work as “annotations with gratitude”.

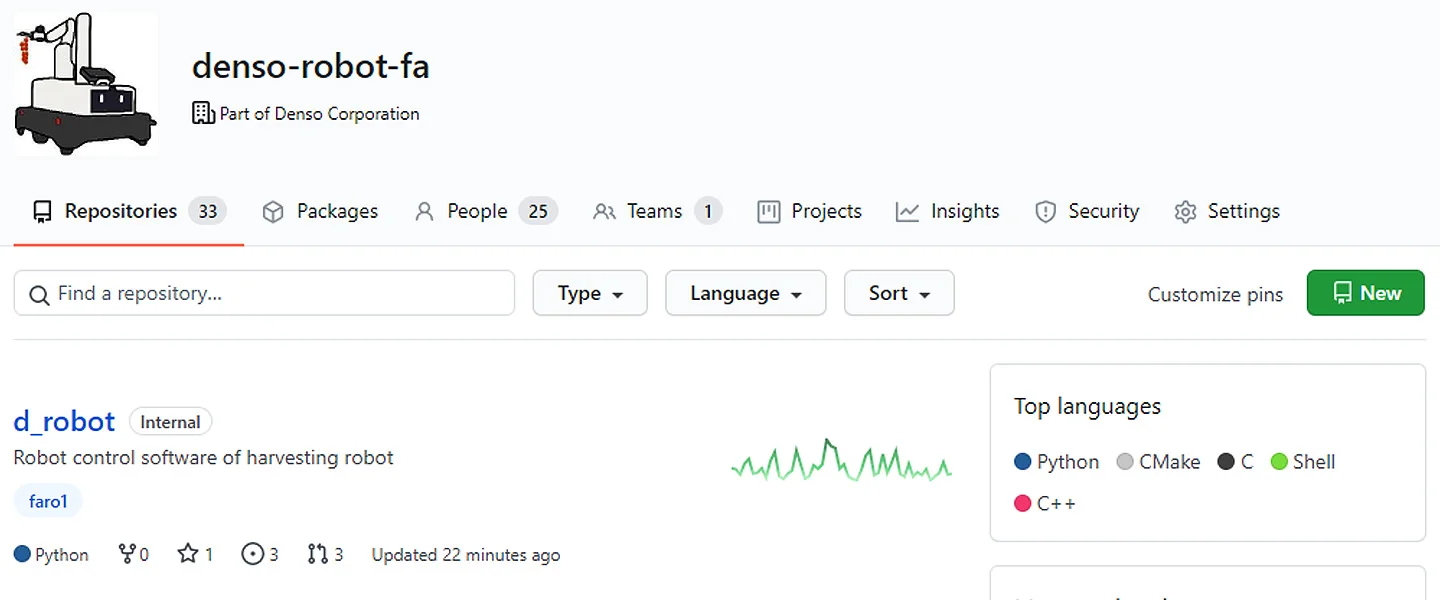

Completed annotation data and AI-related knowledge are collected in Confluence, a wiki system, and in-development software is managed with Git, the de facto version control system among software developers. We use GitHub Enterprise as our Git hosting service. When a pull request (a request to merge a software change) is opened on GitHub, automated tests run via GitHub Actions (a practice known as continuous integration, or CI), so software problems are identified automatically. In other words, our code is tested before it goes to software review.
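As an illustration of the kind of check CI can run on each pull request, here is a minimal sketch using Python’s built-in `unittest` module. The `filter_detections` helper and its dictionary format are hypothetical; the team’s actual tests and workflow configuration are not described in the interview.

```python
import unittest


def filter_detections(detections, min_confidence):
    """Hypothetical helper: keep detections whose confidence meets the threshold."""
    return [d for d in detections if d["confidence"] >= min_confidence]


class FilterDetectionsTest(unittest.TestCase):
    def test_drops_low_confidence_detections(self):
        dets = [{"label": "bunch", "confidence": 0.9},
                {"label": "bunch", "confidence": 0.4}]
        self.assertEqual(filter_detections(dets, 0.5), [dets[0]])

    def test_threshold_is_inclusive(self):
        dets = [{"label": "bunch", "confidence": 0.5}]
        self.assertEqual(filter_detections(dets, 0.5), dets)

    def test_empty_input(self):
        self.assertEqual(filter_detections([], 0.5), [])
```

Saved as, say, `test_detections.py`, this runs locally or in a CI job with `python -m unittest`, which discovers files matching `test*.py`. A failing assertion makes the command exit with a nonzero status, which is what marks the pull request’s check as failed.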

Regarding building the infrastructure required for this software development process, my DENSO coworker Hidehiko Kuramoto, a member of the ICT Engineering Division where I used to work, has given his full support. His infrastructure has proven indispensable for developing software efficiently.

— What is the current state of your robot’s harvesting capabilities?

Hasegawa: In terms of AI-model image recognition, we have boosted obstacle detection precision from 65 percent initially to 98 percent currently, reduced tomato bunch detection time from 3 seconds to 1 second, brought down the margin of error for ripeness determinations from class 2 to class 1, and greatly increased peduncle detection precision from 50 percent to 92 percent.

As a result, harvest success rates have risen substantially. Each row of cherry tomatoes inside the greenhouses is about 80 meters long, and when things go well, we are able to harvest 90 percent or more of the crop in a row. However, results vary when we encounter unforeseen circumstances arising from crop management, which can bring harvesting rates down to around 60 percent.

Although we are still debating how to best quantify and present information on picking speeds, under ideal conditions we are currently able to harvest one bunch in about 20 seconds.

Serving farmers around the world

— How do you plan to expand your agricultural robot development efforts in the future?

Hasegawa: We have greatly improved harvesting rates for the robot, and now we want to start improving usability for practical purposes. One way to improve usability would be to promptly give up harvesting tomatoes that are difficult to pick, as this would decrease overall harvesting times. Currently, we’re carrying out monitoring tests at farmers’ locations.
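The trade-off behind giving up on hard-to-pick tomatoes can be sketched as a simple time budget per bunch. The policy, the `harvest_row` function, and the attempt times below are hypothetical; only the figure of about 20 seconds per bunch under ideal conditions comes from the interview.

```python
from typing import List, Tuple


def harvest_row(attempt_times: List[float], give_up_after: float) -> Tuple[int, float]:
    """Simulate one row under a hypothetical per-bunch time budget.

    Each entry in attempt_times is how many seconds a bunch would take to pick.
    A bunch that would take longer than give_up_after is abandoned once the
    budget is spent. Returns (bunches picked, total seconds spent).
    """
    picked, elapsed = 0, 0.0
    for t in attempt_times:
        if t <= give_up_after:
            picked += 1
            elapsed += t
        else:
            elapsed += give_up_after  # time spent before giving up
    return picked, elapsed


# Hypothetical row: four easy bunches (~20 s each) and one hard bunch (120 s).
row = [20.0, 20.0, 20.0, 120.0, 20.0]
print(harvest_row(row, give_up_after=float("inf")))  # (5, 200.0)
print(harvest_row(row, give_up_after=40.0))          # (4, 120.0)
```

With a 40-second budget, the hypothetical row yields four bunches in 120 seconds instead of five bunches in 200 seconds: a lower harvest rate, but a much shorter total time. For scale, at about 20 seconds per bunch, picking time alone would cap throughput at 3600 / 20 = 180 bunches per hour, before travel along the row is counted.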

The robot is designed to pick cherry tomatoes at the moment, but we think there is high demand for picking medium and large tomatoes globally, so we want to expand applicability to these crops as well. Aside from crop harvesting, many farmers would be happy to have robots that could handle other operations as well, such as removing leaves.

— Do you plan to apply this technology overseas?

Hasegawa: We have been contacted by numerous overseas organizations about our technologies. In April 2020, DENSO invested in Certhon Group, a Dutch greenhouse provider, and is now working jointly with them. Although we haven’t been able to send staff there in person due to the Covid-19 pandemic, we have been logging in to their system remotely and providing support as Certhon begins their robot tests.

— If you use these technologies to harvest crops other than tomatoes, will you need to modify the AI models as well?

Hasegawa: Our current AI models are effective for image recognition on medium-sized tomatoes. With large tomatoes, however, we have to pick them one at a time, which requires more sophisticated robot control in addition to image recognition. This makes things much more challenging.

We have also received requests for robots that can harvest grapes, cabbages and other crops, which would require new AI training. However, for image recognition of cabbages, we were able to use our cherry tomato AI models, along with the knowledge gained so far, to complete training and achieve excellent results in a short time. This was accomplished in about half a year by Hayato Ishikawa, a new member at DENSO who had no AI-related knowledge or experience as of last year.

By starting with just the image recognition component, we can find ways to expand our business.

— Which parts of robotics development are most interesting?

Hasegawa: Robotics development is sometimes called the “mixed martial arts” of cutting-edge technologies. Because it involves software as well as hardware control, it’s interesting technically and helps to develop one’s engineering skills.

With agricultural robot development, we have to carry out numerous tests in actual farm fields, which means enduring hot summer days and cold nights. Although this can be hard, we get to work closely with the end users, which is really rewarding. And, of course, we’re treated to innumerable tasty fruits and vegetables, so people who like to eat can enjoy this job!

— It seems like engineers in this field need a lot of knowledge and skills.

Hasegawa: Skills related to deep learning and other software-related fields are important, but this is only one aspect of how we deliver value to customers. Whether you’re a software engineer or a hardware engineer, it’s important to avoid limiting yourself to one area of expertise. You have to transcend those boundaries in order to develop outstanding robots.

When I applied for the opening in the Robotics Systems Development Department and was reassigned here, I was ready for some tough challenges because it was a career transition for me from hardware engineering to software engineering. And although it was even tougher than I had imagined, I have found those challenges to be the most interesting and enjoyable part.

I once heard about a vacuum cleaner maker that created 5,000 prototype units while designing a new product; with the robot, we have gone through about 100 failed prototypes, so there may be another 4,900 to go.
