To Achieve AI-based Fully Automated Driving—R&D Project on Elemental Technologies at DENSO

On June 9, 2020, DENSO Tech Links Tokyo #7, an event organized by DENSO Corporation, was held as a webinar. The theme was “Human Drivers and AI—Automated Driving from the Viewpoint of Human Characteristics.” DENSO employees who play key roles in advanced technology talked about the development of automated driving technology and about AI research that takes human characteristics into account, with the aim of realizing new mobility. The next speaker was Naoki Ito, General Manager of the Applied AI R&I Dept., AI R&I Div., Advanced Research and Innovation Center. He introduced R&D projects on tracking, free-viewpoint image synthesis, and DNN accelerators aimed at achieving AI-based automated driving.

■Speakers

Naoki Ito

General Manager of the Applied AI R&I Dept., AI R&I Div., Advanced Research and Innovation Center

Four Automated Driving Levels and Future Mission

Naoki Ito: I will talk about DENSO’s initiatives in automated driving from the viewpoint of R&D on AI.

The theme of my presentation is DENSO’s initiatives on advanced driver assistance systems (ADAS) and automated driving (AD) systems, which are expected to become a global trend.

The vertical axis shows the target vehicles, including passenger cars or so-called “privately owned vehicles,” commercial vehicles such as trucks, and shared and service cars including taxis and small buses. The horizontal axis shows the AD/ADAS levels. The level increases from left to right.

Automated driving is categorized into four levels (Levels 1 to 4). Active safety, shown on the left, and about half of the ADAS/AD functions fall under Level 1 or 2. At Levels 1 and 2, the driver is basically responsible for driving, and the system supports the driver. At Levels 3 and 4, the system performs the driving task, although at Level 3 the driver must take over when the system cannot handle the situation.

At Level 4, a fully automated driving system enables driverless operation of small vehicles. Automated valet parking will also be required, though it may fall outside the definition of automated driving.
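The split of responsibility described above can be captured in a small lookup table. This is a minimal sketch of the talk’s four-level summary, not a formal definition from any standard; the class and field names (`AutomationLevel`, `performs_driving`, `fallback`) are hypothetical.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class AutomationLevel:
    level: int
    performs_driving: str  # who performs the driving task
    fallback: str          # who takes over when the system cannot handle a situation

# Responsibilities as summarized in the talk; field names are illustrative only.
LEVELS = {
    1: AutomationLevel(1, performs_driving="driver", fallback="driver"),  # system supports
    2: AutomationLevel(2, performs_driving="driver", fallback="driver"),  # system supports
    3: AutomationLevel(3, performs_driving="system", fallback="driver"),
    4: AutomationLevel(4, performs_driving="system", fallback="system"),
}

def driver_must_stay_ready(level: int) -> bool:
    """True if a human must be ready to drive at this level."""
    return LEVELS[level].fallback == "driver"

print([lvl for lvl in LEVELS if driver_must_stay_ready(lvl)])  # [1, 2, 3]
```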

At present, Level 1 and Level 2 active safety technologies, such as collision avoidance braking, adaptive cruise control (ACC), and lane keep assist (LKA) on expressways, are becoming widespread.

Level 2 and Level 3 technologies will need to be developed for arterial and general public roads, and Level 4 technologies for service cars operating within operational design domains (ODDs). Our department serves as an R&D team, so our mission is to develop elemental technologies for Levels 3 and 4 and contribute to the company’s business.

Current Issues in Automated Driving

Research on automated driving must address various difficult issues. For example, sensing performance must be maintained under backlighting at a tunnel exit and in very poor weather such as heavy rain and dense fog.

Even if sensing performance is maintained, the system must still detect objects. Ordinary traffic participants include vehicles, pedestrians, and bicycles, but unfamiliar objects may also appear on the road, and they must be detected too. Collisions with animals that enter the road must also be avoided. If a road that was open yesterday is under construction today, the vehicle must take a detour. There are many such difficult situations to cope with.

Human drivers can understand such situations correctly and make appropriate judgments, but this is a very difficult problem for fully automated driving.

As I explained, automated driving systems are expected to evolve gradually from simple to more complex systems. Applications will spread from expressways, where automated driving is relatively easy to achieve, to arterial and general public roads.

There are various challenging issues from the viewpoint of R&D, so this is an exciting field of research.

What Can Be Achieved by Using AI

AI is one solution to address these challenging issues. Here, AI refers to deep learning and machine learning technologies. We have been working to apply AI technologies to vehicles.

You may be familiar with this figure. Image recognition technologies are benchmarked every year, and the lower the point on the graph, the higher the accuracy. Recognition accuracy used to improve by only a few percentage points a year, but deep learning, one type of AI technology, improved it by nearly 10 percentage points. As a result, deep learning and machine learning technologies have attracted much public attention and many researchers.

Today, for specific tasks such as image recognition, AI achieves higher accuracy than humans.
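Image-classification benchmarks of this kind are commonly scored by error rate, often as a top-5 error. Assuming that metric (the talk does not name the benchmark or the exact metric), the following minimal sketch shows how it is computed; the function name and the toy scores are illustrative only.

```python
from typing import Sequence

def top_k_error(scores: Sequence[Sequence[float]], labels: Sequence[int], k: int = 5) -> float:
    """Fraction of samples whose true label is not among the k highest-scoring classes."""
    misses = 0
    for row, label in zip(scores, labels):
        # Class indices sorted by descending score, truncated to the top k.
        top_k = sorted(range(len(row)), key=lambda i: row[i], reverse=True)[:k]
        if label not in top_k:
            misses += 1
    return misses / len(labels)

scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.3, 0.2, 0.5]]  # toy class scores
labels = [1, 2, 0]
print(top_k_error(scores, labels, k=1))  # 2 of 3 samples wrong
print(top_k_error(scores, labels, k=2))  # 1 of 3 samples wrong
```

A lower value on such a graph therefore means fewer misclassified images, which is why a lower point indicates higher accuracy.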

We have been working to apply deep learning and machine learning technologies to ADAS/AD.

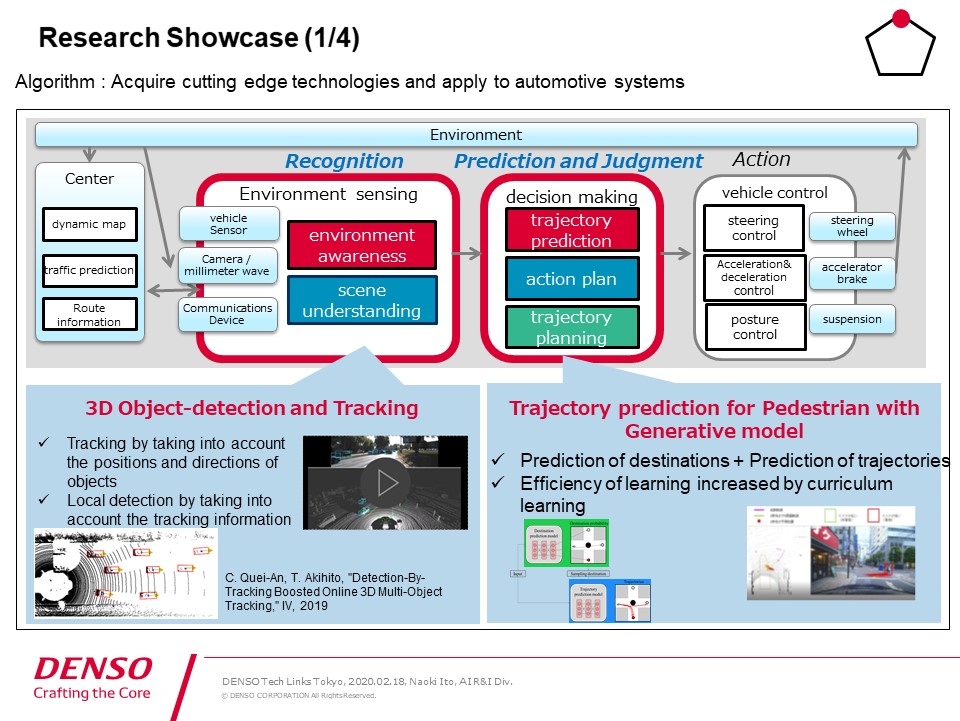

This figure shows a simple block diagram for automated driving. Information obtained from the vehicle’s various sensors is used to recognize the surrounding environment and understand the scene. The recognition block outputs the detected objects, whose trajectories can then be predicted. The prediction results are used to plan the vehicle’s behavior and trajectory so that steering, braking, and acceleration can be controlled properly.

We are attempting to use deep learning and other AI technologies to predict trajectories and plan paths, in addition to recognition.
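The flow from recognition to control can be sketched as four chained functions. This is a toy, hypothetical pipeline: recognition is a placeholder, a constant-velocity extrapolation stands in for a learned trajectory predictor, and only longitudinal (speed) control is shown, not steering. All names, thresholds, and speeds are assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]  # ego frame: x forward, y left, in metres

@dataclass
class TrackedObject:
    position: Point
    velocity: Point  # metres per second

def recognize(sensor_frame: dict) -> List[TrackedObject]:
    # Placeholder: a real system would run detection and tracking on camera/LiDAR data.
    return [TrackedObject(tuple(o["pos"]), tuple(o["vel"])) for o in sensor_frame["objects"]]

def predict(objects: List[TrackedObject], horizon_s: float = 2.0, dt: float = 0.5) -> List[List[Point]]:
    # Constant-velocity extrapolation, standing in for a learned trajectory predictor.
    steps = int(horizon_s / dt)
    return [
        [(o.position[0] + o.velocity[0] * dt * k, o.position[1] + o.velocity[1] * dt * k)
         for k in range(1, steps + 1)]
        for o in objects
    ]

def plan(predictions: List[List[Point]], cruise_speed: float = 15.0,
         lane_half_width: float = 1.5, lookahead_m: float = 20.0) -> float:
    # Target speed: stop if any predicted point enters the ego lane ahead, else cruise.
    for trajectory in predictions:
        for x, y in trajectory:
            if 0.0 < x < lookahead_m and abs(y) < lane_half_width:
                return 0.0
    return cruise_speed

def control(current_speed: float, target_speed: float, gain: float = 0.5) -> float:
    # Proportional longitudinal command: positive accelerates, negative brakes.
    return gain * (target_speed - current_speed)

frame = {"objects": [{"pos": (10.0, 3.0), "vel": (0.0, -1.0)}]}  # pedestrian heading toward the lane
command = control(10.0, plan(predict(recognize(frame))))
print(command)  # -5.0
```

Keeping each stage behind its own function boundary is what allows a learned model to replace the constant-velocity predictor or the rule-based planner without touching the rest of the chain.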

DENSO’s Initiatives to Apply AI to Vehicles

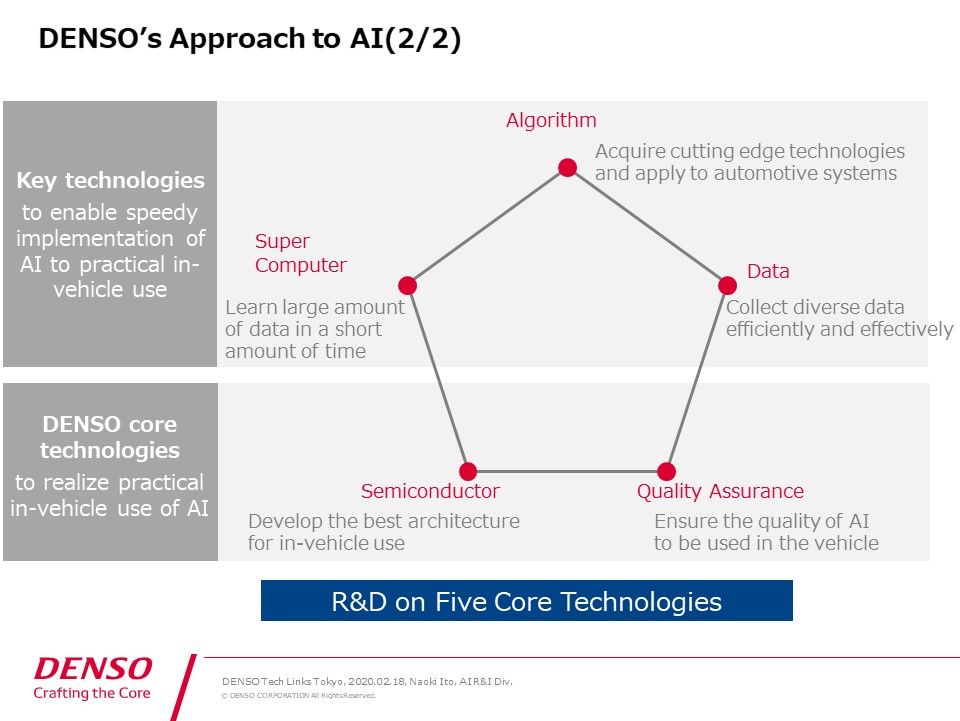

This figure outlines DENSO’s approach to applying AI to vehicles.

Algorithms are the main focus of R&D on AI. Improving the accuracy of AI algorithms requires a large amount of data, but the more data there is, the longer the learning process takes. We therefore need technologies that use computers efficiently for learning.

R&D on algorithms and computer technologies is actively being conducted around the world, so I do not believe that DENSO is the global leader in these technologies.

As new technologies and papers are published around the world, we identify those that may be useful for vehicles, apply them to vehicle systems, and evaluate their effectiveness. In this way, we focus on developing technologies that can be implemented in systems quickly.

Even an algorithm that appears to have high recognition performance may not run in real time on a vehicle system. We develop technologies to attain real-time performance quickly.

These three technologies alone are not enough to apply AI to vehicles. In particular, computing resources in a vehicle are limited, so embedded technologies and semiconductor technologies are also required to run AI properly.

Quality is a very important factor in vehicle production, so it is essential to assure the quality of AI.

Regarding the bottom two items of the pentagon, we have well-established embedded technologies as well as quality assurance technologies and expertise. It is essential to harness these capabilities and work on the five elemental technologies in a comprehensive manner in order to apply AI to vehicles.

Now, I would like to briefly introduce the development of each elemental technology.

Tracking Algorithms

First, I will talk about algorithms, which are the main topic. The block diagram that I showed earlier appears in the upper part of the slide. Let’s start with the recognition process.

Objects are detected for tracking. Here, tracking refers to the task of detecting moving objects in a video and continuously following their trajectories from frame to frame.

The technology introduced here uses two input sources: images captured by a camera and so-called point cloud data obtained from a LiDAR sensor. Both are used to detect objects reliably for tracking.

If objects are detected properly in every frame, it is relatively easy to continue tracking by matching detections to the objects being tracked. Of course, detection is not perfect; when it fails, the tracking information is interrupted, resulting in incorrect tracking.

In this algorithm, if an object is not detected properly in a frame, its current position is estimated to some extent from past tracking information, and detection is performed again. This improves tracking performance.
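The idea of estimating a missed object’s position from its past track and then looking for it again can be illustrated with a minimal 2D tracker. This is a hypothetical sketch, not the method from the talk: it uses nearest-neighbour matching inside a distance gate, a constant-velocity motion model, and a second matching pass that accepts lower-confidence detections near the predicted position as a stand-in for re-detection. The class names, thresholds, and single-point object representation are all assumptions; the system in the talk works on camera images and LiDAR point clouds.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[float, float]

@dataclass
class Detection:
    pos: Point
    score: float  # detector confidence in [0, 1]

@dataclass
class Track:
    track_id: int
    pos: Point
    vel: Point = (0.0, 0.0)  # displacement per frame
    misses: int = 0
    history: List[Point] = field(default_factory=list)

    def predicted(self) -> Point:
        # Estimate the current position from past motion (constant velocity).
        return (self.pos[0] + self.vel[0], self.pos[1] + self.vel[1])

class SimpleTracker:
    def __init__(self, gate: float = 2.0, high: float = 0.6, low: float = 0.2, max_misses: int = 3):
        self.gate, self.high, self.low, self.max_misses = gate, high, low, max_misses
        self.tracks: List[Track] = []
        self.next_id = 0

    def _nearest(self, track: Track, candidates: List[Detection]) -> Optional[Detection]:
        # Closest candidate within the gate around the predicted position.
        pred = track.predicted()
        best, best_d = None, self.gate
        for det in candidates:
            d = math.dist(pred, det.pos)
            if d <= best_d:
                best, best_d = det, d
        return best

    def step(self, detections: List[Detection]) -> List[Track]:
        unused = list(detections)
        for track in self.tracks:
            det = self._nearest(track, [d for d in unused if d.score >= self.high])
            if det is None:
                # Second pass: accept a weaker detection near the predicted position.
                det = self._nearest(track, [d for d in unused if d.score >= self.low])
            if det is not None:
                unused.remove(det)
                track.vel = (det.pos[0] - track.pos[0], det.pos[1] - track.pos[1])
                track.pos, track.misses = det.pos, 0
            else:
                track.pos = track.predicted()  # coast through a missed or occluded frame
                track.misses += 1
            track.history.append(track.pos)
        self.tracks = [t for t in self.tracks if t.misses <= self.max_misses]
        for det in unused:  # start new tracks only from confident leftovers
            if det.score >= self.high:
                self.tracks.append(Track(self.next_id, det.pos, history=[det.pos]))
                self.next_id += 1
        return self.tracks

tracker = SimpleTracker()
frames = [
    [Detection((0.0, 0.0), 0.9)],
    [Detection((1.0, 0.0), 0.9)],
    [],                            # object occluded: no detection
    [Detection((3.1, 0.0), 0.3)],  # weak detection near the predicted position
]
for dets in frames:
    tracks = tracker.step(dets)
print(tracks[0].track_id, tracks[0].history)
# 0 [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.1, 0.0)]
```

The track survives the empty frame by coasting on its estimated position, and that estimate is what lets the weak detection in the last frame be attached to the same ID instead of being discarded.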

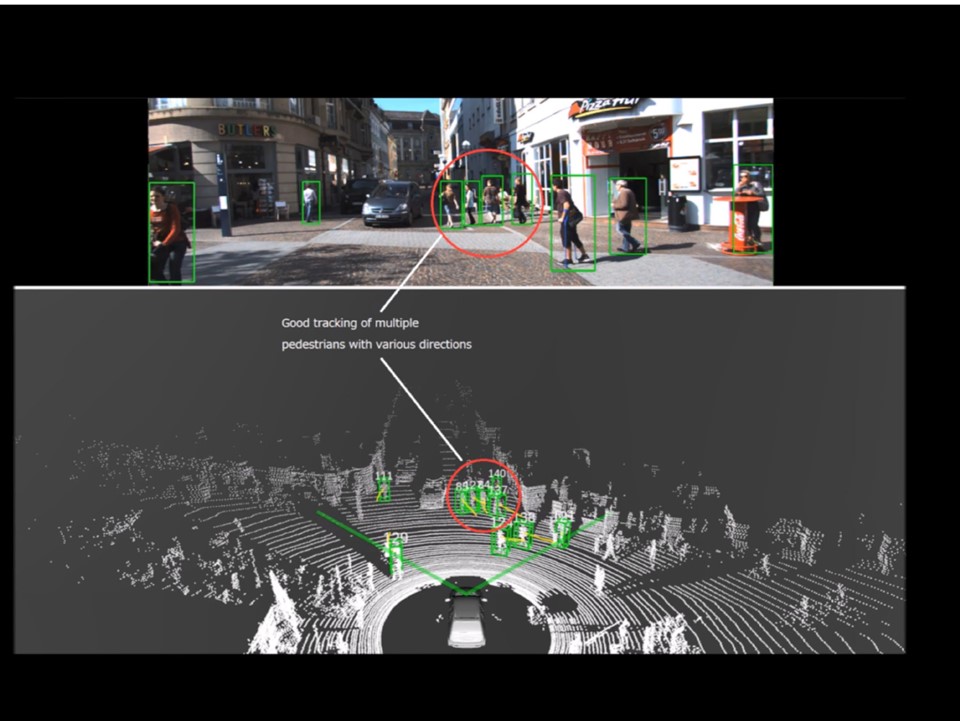

This is a demonstration video using a public dataset named KITTI.

(The video starts.)

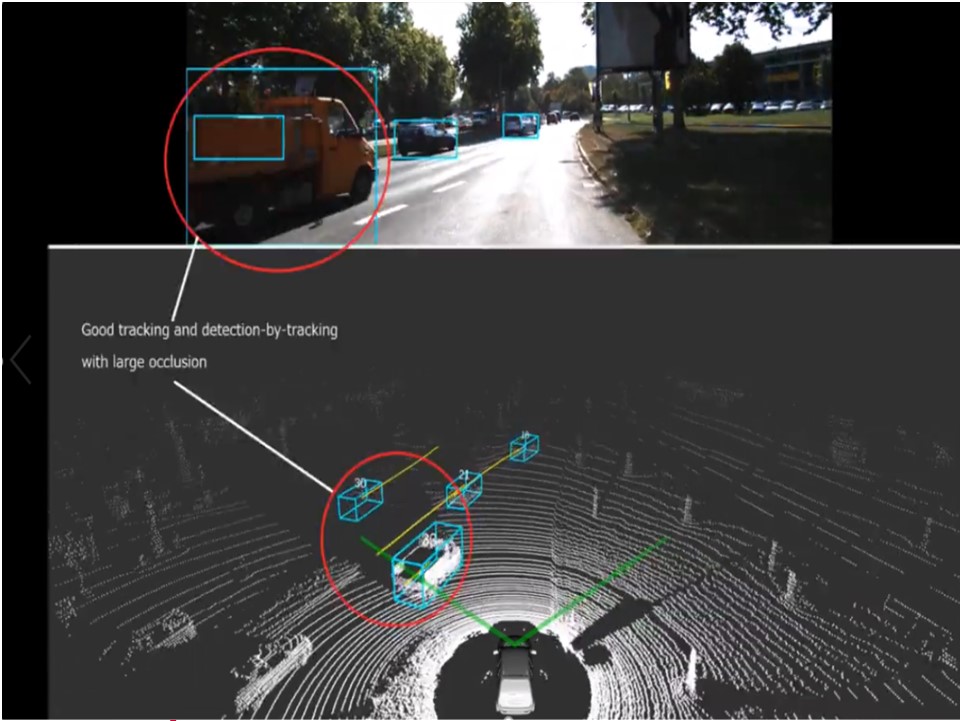

The upper part of the screen shows the image captured by the camera, and the lower part shows the point cloud data obtained from a LiDAR sensor. These two input sources are used to detect pedestrians and vehicles. The tracking trajectory information is indicated by the yellow line.

In the lower part of the screen, the yellow lines, which show past movement, are displayed at all times. Detection and tracking remain reasonably stable even in scenes with a relatively large number of pedestrians.

In this scene, the vehicle is moving. A vehicle behind this truck is not visible in the camera image, but a few faint points are detected in the LiDAR point cloud.

The situation in which an object farther away is hidden by another object in front of it is called occlusion. Occluded objects can still be detected by using past tracking data, so the algorithm enables relatively robust detection.

We gave a presentation about this technology at the Intelligent Vehicles Symposium about a year ago. If you are interested, you can search the web and find the details. We are conducting R&D on such technology.

Trajectory Prediction of Pedestrians

This technology made it possible to identify the positions of objects and obtain their past trajectories, which motivated us to predict their future trajectories.

I would like to introduce our project to predict the trajectories of pedestrians. The detection and tracking results that I mentioned earlier were used as the input sources of the trajectory prediction.

Future trajectories are predicted based on past tracking data. As a first step toward trajectory prediction, we worked on predicting destinations. The image is small and may be hard to see, but this (the green box on the slide) is the object whose trajectory is to be predicted; in this example, it is a bicycle. Multiple possible destinations of the bicycle are predicted.

The map information is used for the prediction. It contains simple information including road shapes and building positions. The algorithm predicts multiple destinations based on this information. It identifies the destinations, estimates the paths to reach the destinations, and outputs highly probable paths.

This algorithm uses a generative adversarial network (GAN) model for trajectory prediction together with a curriculum learning approach. Curriculum learning is used because learning the whole task at once does not proceed smoothly; instead, learning the trajectories to the destinations is divided into multiple steps so that the model learns gradually.
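One common way to structure such gradual learning is to lengthen the prediction horizon stage by stage. The sketch below assumes that scheme (the talk only says that learning is split into multiple steps), shows only how the training targets change per stage, and omits the GAN generator and discriminator entirely; `curriculum_horizons` and `staged_targets` are hypothetical names.

```python
from typing import Iterator, List, Sequence, Tuple

Point = Tuple[float, float]

def curriculum_horizons(full_horizon: int, num_stages: int) -> List[int]:
    """Prediction horizon (future time steps) used at each stage, growing to the full horizon."""
    return [max(1, round(full_horizon * (s + 1) / num_stages)) for s in range(num_stages)]

def staged_targets(futures: Sequence[Sequence[Point]], full_horizon: int,
                   num_stages: int) -> Iterator[Tuple[int, List[List[Point]]]]:
    # Yield (stage, targets): early stages ask only for the first few future points,
    # later stages for progressively longer trajectories toward the destination.
    for stage, horizon in enumerate(curriculum_horizons(full_horizon, num_stages)):
        yield stage, [list(future[:horizon]) for future in futures]

future = [(float(t), 0.0) for t in range(1, 13)]  # one ground-truth trajectory, 12 steps
for stage, targets in staged_targets([future], full_horizon=12, num_stages=3):
    print(stage, len(targets[0]))  # prints "0 4", then "1 8", then "2 12"
```

In a full training loop, each stage would train the model on its targets until it converges before moving to the next, longer horizon.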

By using these techniques, the algorithm can properly estimate the trajectories of pedestrians to some extent. The research was jointly conducted with Carnegie Mellon University.

Examples of Trajectory Prediction Results

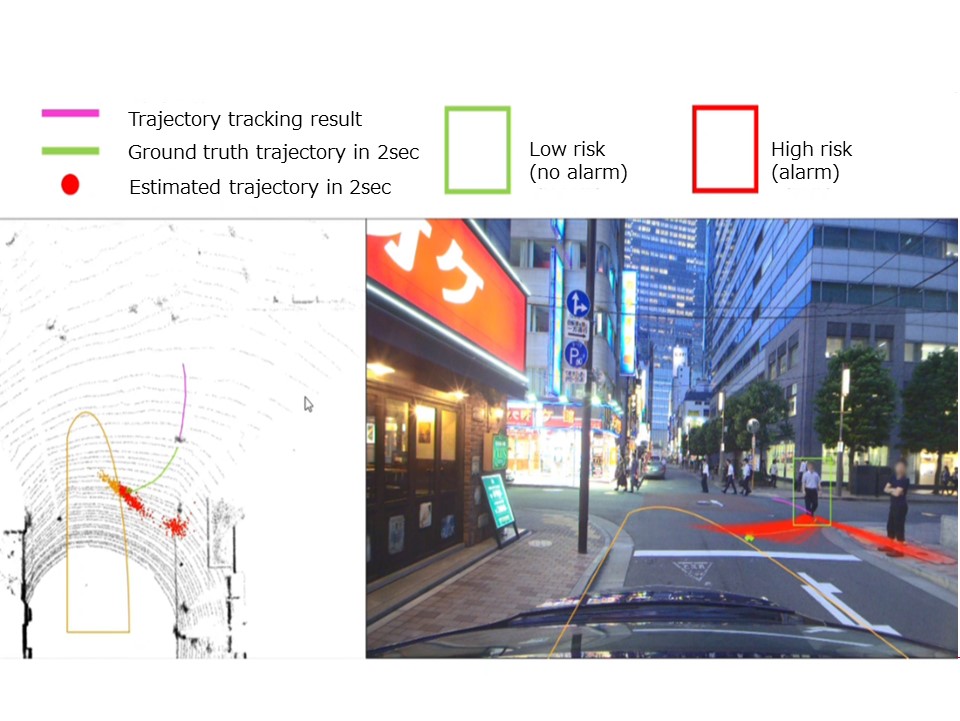

Let me show you a video on the demonstration results of predicting the trajectories of pedestrians. This was not a test of automated driving; the vehicle was driven by a human driver.

We created a pedestrian warning demonstration app. A hazardous area, whose extent is adjustable in the settings, is defined around the vehicle. A warning is issued if a pedestrian is likely to enter the hazardous area.

The app uses the pedestrian’s past trajectory, current position, and simple road-shape information. The position of the target pedestrian two seconds ahead is predicted using the intersection shape and building positions. The image on the right shows a bird’s-eye view, and the image on the left shows the result projected onto the camera image.

The app issues a warning by displaying a red bounding box if the probability of a pedestrian entering the hazardous area around the vehicle exceeds a threshold, which is also adjustable. If a pedestrian is unlikely to enter the hazardous area, the pedestrian is shown with a green bounding box. The green line indicates the ground truth, that is, the actual trajectory of the target pedestrian.

Here, the app issues a red warning because the probability of this pedestrian entering the yellow hazardous area around the vehicle is high.
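The warning decision can be sketched as follows, assuming the predictor outputs several sampled future trajectories per pedestrian (for example, over the two-second horizon) and the hazardous area is a rectangle in the vehicle frame. The rectangle size, the default threshold, and estimating probability by counting samples are assumptions for illustration, not the app’s actual implementation.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

Point = Tuple[float, float]  # vehicle frame: x forward, y left, in metres

@dataclass
class HazardZone:
    # Adjustable rectangle around the vehicle (hypothetical default size).
    x_min: float = 0.0
    x_max: float = 10.0
    y_min: float = -2.0
    y_max: float = 2.0

    def contains(self, p: Point) -> bool:
        return self.x_min <= p[0] <= self.x_max and self.y_min <= p[1] <= self.y_max

def entry_probability(samples: Sequence[Sequence[Point]], zone: HazardZone) -> float:
    """Fraction of sampled future trajectories (e.g. the next 2 s) that enter the zone."""
    hits = sum(1 for trajectory in samples if any(zone.contains(p) for p in trajectory))
    return hits / len(samples)

def box_color(samples: Sequence[Sequence[Point]], zone: HazardZone, threshold: float = 0.5) -> str:
    # Red bounding box if the estimated probability exceeds the adjustable threshold, else green.
    return "red" if entry_probability(samples, zone) > threshold else "green"

zone = HazardZone()
samples = [
    [(5.0, 3.0), (5.0, 1.0)],  # steps into the zone
    [(6.0, 3.0), (6.0, 0.0)],  # steps into the zone
    [(5.0, 4.0), (5.0, 5.0)],  # walks away
]
print(round(entry_probability(samples, zone), 2), box_color(samples, zone))  # 0.67 red
```

Because several hypotheses are kept, a pedestrian who might either turn or walk straight contributes to the probability through both branches, which matches the multi-direction predictions shown in the demonstration.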

This is an interesting example. No warning has been issued yet, but the app shows prediction results pointing in two possible directions. The red lines to the left match the ground truth; in fact, the pedestrian walked to the left.

At the same time, the pedestrian could plausibly have walked straight ahead in this scenario, and the app also captured that possibility with these downward lines.

As the pedestrian walked to the left, the prediction results converged to the left. Deep learning technology is used in the pedestrian trajectory prediction. This is an overview of our research on algorithms.

Development of a DNN Accelerator

Let me move on to semiconductor development. Implementing a deep learning-based network on an in-vehicle computer increases the computer’s cost, power consumption, and calculation time. To accelerate such processing, we are developing circuits called DNN accelerators.

We design IP core circuits for DNN accelerators. Conceptually, such a circuit consists of many small CPUs. By switching the connections between them appropriately, it can handle various networks, achieving high processing speed with low power consumption.
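To illustrate why many small processors help, the sketch below simulates how the multiply-accumulate (MAC) operations of a matrix product, the core computation of most DNN layers, can be spread across a grid of processing elements (PEs). It is a generic, sequential Python model with a hypothetical round-robin output-to-PE mapping; it says nothing about how DENSO’s IP core is actually organized or interconnected.

```python
from typing import Dict, List, Tuple

Matrix = List[List[float]]

def distributed_matmul(a: Matrix, b: Matrix, pe_rows: int, pe_cols: int
                       ) -> Tuple[Matrix, Dict[Tuple[int, int], int]]:
    """Compute a @ b, assigning each output element to one PE in a pe_rows x pe_cols grid.

    Returns the product and the number of multiply-accumulate (MAC) operations per PE.
    """
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    out = [[0.0] * m for _ in range(n)]
    macs = {(r, c): 0 for r in range(pe_rows) for c in range(pe_cols)}
    for i in range(n):
        for j in range(m):
            pe = (i % pe_rows, j % pe_cols)  # hypothetical round-robin output-to-PE mapping
            acc = 0.0
            for t in range(k):
                acc += a[i][t] * b[t][j]  # one MAC executed on this PE
            out[i][j] = acc
            macs[pe] += k
    return out, macs

out, macs = distributed_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]], pe_rows=2, pe_cols=2)
print(out)   # [[19.0, 22.0], [43.0, 50.0]]
print(macs)  # {(0, 0): 2, (0, 1): 2, (1, 0): 2, (1, 1): 2}
```

In hardware, the PEs run concurrently, so an even MAC count per PE means the whole product finishes in roughly the time one PE needs for its share.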

Data Collection Using Free-viewpoint Image Synthesis Technology

Next, I will talk about our efforts to collect data. The AI learning process requires various data. We are working on a project to efficiently generate various data, including edge cases, by using free-viewpoint image synthesis technology.

The image on the right illustrates this. A camera mounted at the center of the vehicle captures the image from this angle (pointing to the upper image). For data collection, images from other angles should also be available, such as this one (lower image), which was captured from a position slightly to the left.

However, capturing such images is difficult: the driver would have to drive along the left or right side of the lane to collect the data.

We have been developing a technology to capture such images efficiently. In this example, 18 cameras arranged in three rows and six columns were mounted on the roof of a data collection vehicle. Images were captured from many different angles, and a technology for interpolating images between cameras was used to generate free-viewpoint images.
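A very simplified sketch of deciding which cameras contribute to a virtual viewpoint: treat the 3 x 6 rig as a grid, place the virtual viewpoint at fractional grid coordinates, and weight the four surrounding cameras bilinearly. Practical free-viewpoint synthesis also warps each image using depth or disparity before blending, which is omitted here; the function names and the coordinate convention are hypothetical.

```python
import math
from typing import Dict, Tuple

ROWS, COLS = 3, 6  # camera grid on the roof: 3 rows x 6 columns
Camera = Tuple[int, int]  # (row index, column index)

def camera_weights(row: float, col: float) -> Dict[Camera, float]:
    """Bilinear weights of the cameras around a virtual viewpoint at fractional grid position (row, col)."""
    if not (0 <= row <= ROWS - 1 and 0 <= col <= COLS - 1):
        raise ValueError("virtual viewpoint lies outside the camera grid")
    # Top-left camera of the enclosing grid cell (clamped so the last row/column still has a cell).
    r0 = min(math.floor(row), ROWS - 2)
    c0 = min(math.floor(col), COLS - 2)
    fr, fc = row - r0, col - c0
    weights = {
        (r0, c0): (1 - fr) * (1 - fc),
        (r0, c0 + 1): (1 - fr) * fc,
        (r0 + 1, c0): fr * (1 - fc),
        (r0 + 1, c0 + 1): fr * fc,
    }
    return {cam: w for cam, w in weights.items() if w > 0}

def blend_pixel(samples: Dict[Camera, float], weights: Dict[Camera, float]) -> float:
    # Naive weighted blend of one pixel; real view synthesis warps each image first.
    return sum(weights[cam] * samples[cam] for cam in weights)

w = camera_weights(1.0, 2.5)  # halfway between cameras (1, 2) and (1, 3)
print(w)  # {(1, 2): 0.5, (1, 3): 0.5}
print(blend_pixel({(1, 2): 100.0, (1, 3): 200.0}, w))  # 150.0
```

Blending without depth-based warping produces ghosting wherever nearby and distant objects shift by different amounts between cameras, which is one reason careful calibration of all 18 cameras matters.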

The free-viewpoint image synthesis technology was difficult to achieve due to various factors, including synchronization and calibration of 18 cameras and storage of a large amount of data.

We work with external partners to develop technologies. In this project, we conducted joint research with the University of Virginia.

This video was created using the free-viewpoint image synthesis technology. The images were captured from behind the vehicle. Interpolating images between cameras makes it possible to change the viewpoint quite smoothly and produce seamless images.

Distributed Learning and Quality Assurance of In-vehicle AI

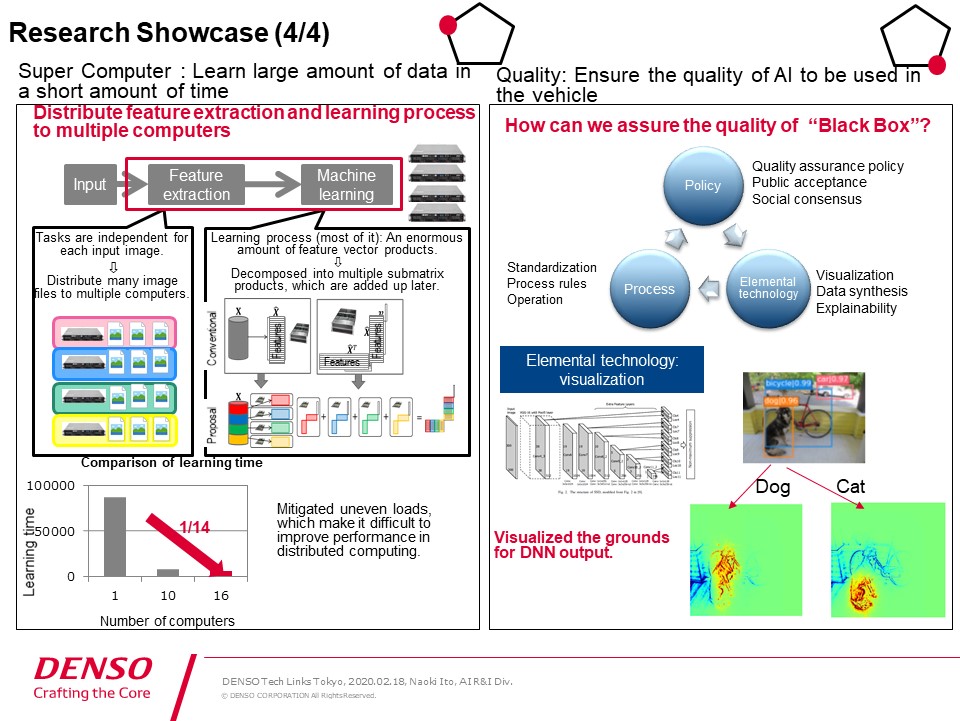

Let’s turn next to computers. (While pointing to the slide) This shows the processing that is used for learning by a machine learning algorithm.

The processing from feature extraction to machine learning takes a long time on a single computer, so we distribute the learning process across multiple computers to increase efficiency.

However, parallel processing cannot be applied to the entire process. Once the input images are loaded, they can be processed by multiple computers because each image is processed independently. The machine learning step itself, however, is not easy to parallelize efficiently.
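The split between an easily parallelized stage and a harder one can be sketched as follows. The feature extractor and the stand-in learning step (a simple mean of the feature vectors) are hypothetical placeholders. A thread pool stands in for the multiple computers mentioned in the talk; for CPU-heavy work in CPython, a `ProcessPoolExecutor` or a cluster scheduler would be more realistic, and the same `map` pattern applies.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List, Sequence

def extract_features(image: Sequence[float]) -> List[float]:
    # Hypothetical per-image features: mean and maximum pixel intensity.
    return [sum(image) / len(image), max(image)]

def extract_all(images: Sequence[Sequence[float]], workers: int = 4) -> List[List[float]]:
    # Each image is processed independently, so this stage maps cleanly onto workers.
    # Results come back in input order because Executor.map preserves ordering.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(extract_features, images))

def fit_centroid(features: List[List[float]]) -> List[float]:
    # Stand-in "learning" step: it aggregates over every feature vector,
    # so distributed workers would have to exchange and combine partial results.
    n = len(features)
    return [sum(f[d] for f in features) / n for d in range(len(features[0]))]

images = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # toy "images" as flat pixel lists
print(fit_centroid(extract_all(images)))  # [3.5, 4.5]
```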

Some members are working to improve efficiency by decomposing the matrices used to multiply feature vectors so that the load can be distributed. This approach makes it possible to take advantage of parallel processing.
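One standard way to distribute a matrix product is a block decomposition along the inner dimension. Assuming that is the kind of decomposition meant here (the talk does not specify), each worker multiplies one pair of slices, and a final reduction adds the partial products. The function names and the thread-based workers are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

Matrix = List[List[float]]

def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain row-by-column product.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def split_inner_matmul(f: Matrix, w: Matrix, parts: int) -> Matrix:
    """Compute f @ w as a sum of partial products over slices of the inner dimension.

    With f = [f_1 ... f_p] (column slices) and w = [w_1; ...; w_p] (row slices),
    f @ w = f_1 @ w_1 + ... + f_p @ w_p, and each term can run on a separate worker.
    """
    d = len(w)
    if not 1 <= parts <= d:
        raise ValueError("parts must be between 1 and the inner dimension")
    bounds = [d * i // parts for i in range(parts + 1)]  # every slice is non-empty

    def partial(i: int) -> Matrix:
        lo, hi = bounds[i], bounds[i + 1]
        return matmul([row[lo:hi] for row in f], w[lo:hi])

    with ThreadPoolExecutor(max_workers=parts) as pool:
        partials = list(pool.map(partial, range(parts)))
    # Reduction: add the partial products element-wise.
    n, m = len(f), len(w[0])
    return [[sum(p[i][j] for p in partials) for j in range(m)] for i in range(n)]

f = [[1, 2, 3, 4], [5, 6, 7, 8]]    # two feature vectors of length 4
w = [[1, 0], [0, 1], [1, 1], [2, 0]]
print(split_inner_matmul(f, w, parts=2))  # [[12, 5], [28, 13]]
```

The reduction step is the price of this decomposition: workers must communicate their partial results, which is part of why the learning stage is harder to parallelize than per-image processing.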

The final point is quality. As I stated at the outset, AI algorithms are derived from a learning process, which is why AI is regarded as a black box. With this in mind, we must develop solutions to assure quality for in-vehicle applications.

We must formulate a quality assurance policy and reflect the policy in the software development process. It may also be necessary to cultivate public acceptance and consensus in society.

Some standardization may be required. Formulation of in-house software process rules and operationalization must also be promoted. Elemental technologies will be required, in some form or other, to establish a successful process.

We believe that we can assure the quality of AI by developing elemental technologies including visualization and Explainable AI (XAI).

A simple example of a visualization technology is shown at the bottom right of the slide. It visualizes which parts of this image a detector uses to detect a dog. This is still a toy problem.
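One widely used visualization of this kind is occlusion sensitivity: mask one region at a time and record how much the detector’s score drops. The sketch below assumes that technique (the slide’s exact method is not described) and uses a hypothetical toy “dog score” that looks only at the top-left corner, so the resulting map should highlight exactly that region.

```python
from typing import Callable, List

Image = List[List[float]]

def occlusion_map(image: Image, score: Callable[[Image], float],
                  patch: int = 2, fill: float = 0.0) -> List[List[float]]:
    """Score drop when each patch x patch region is masked; large drops mark regions the model relies on."""
    h, w = len(image), len(image[0])
    base = score(image)
    heat = [[0.0] * w for _ in range(h)]
    for top in range(0, h, patch):
        for left in range(0, w, patch):
            rows = range(top, min(top + patch, h))
            cols = range(left, min(left + patch, w))
            masked = [row[:] for row in image]  # copy so the original image is untouched
            for r in rows:
                for c in cols:
                    masked[r][c] = fill
            drop = base - score(masked)
            for r in rows:
                for c in cols:
                    heat[r][c] = drop
    return heat

def toy_dog_score(img: Image) -> float:
    # Hypothetical "detector" that only looks at the top-left 2 x 2 pixels.
    return sum(img[r][c] for r in range(2) for c in range(2))

image = [[1.0] * 4 for _ in range(4)]
for row in occlusion_map(image, toy_dog_score):
    print(row)
# first two rows: [4.0, 4.0, 0.0, 0.0]; last two rows: all 0.0
```

For a real detector, the same loop reveals whether the decision rests on the object itself or on incidental background, which is the kind of evidence that supports quality assurance and troubleshooting.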

If we can develop such technologies, we will be able to infer, to some extent, the grounds for the judgments made by AI, making it possible to assure quality and perform troubleshooting. We are conducting joint research on this with universities.

DENSO’s Research Resources

On a final note, I will briefly explain our research resources. In the AI R&I Division, we have a test vehicle for collecting research data. It is equipped with a LiDAR sensor, a camera, and other sensors, some of which are manufactured by our business units, and we use it to collect data for R&D.

Data collection is followed by annotation and the creation of training datasets. We use in-house tools for this process as well. In this example, images captured by a camera and point cloud data obtained from a LiDAR sensor are used as the input sources.

The LiDAR sensor is synchronized with the camera, so the camera images and the point cloud data can be displayed together. The point cloud data lets us recognize the presence of 3D objects and add 3D bounding boxes indicating their position, attitude, and direction. We use a tool that automatically projects the 3D bounding boxes onto the 2D image to create data for our R&D.
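The automatic projection step can be sketched with a pinhole camera model. This assumes the 3D box is already expressed in camera coordinates (a box in the LiDAR frame would first be transformed with the LiDAR-to-camera extrinsics from calibration) and ignores lens distortion. The intrinsics in the example are made-up values, and the function names are hypothetical, not those of DENSO’s in-house tool.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]

def box_corners(center: Vec3, size: Vec3, yaw: float) -> List[Vec3]:
    """Eight corners of a 3D box in camera coordinates (x right, y down, z forward).

    size = (length, height, width); yaw rotates the box about the vertical (y) axis.
    """
    cx, cy, cz = center
    length, height, width = size
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    corners = []
    for dx in (-length / 2, length / 2):
        for dy in (-height / 2, height / 2):
            for dz in (-width / 2, width / 2):
                # Rotate the horizontal offset (dx, dz) about the y axis, then translate.
                x = cx + cos_y * dx + sin_y * dz
                z = cz - sin_y * dx + cos_y * dz
                corners.append((x, cy + dy, z))
    return corners

def project_box(corners: List[Vec3], fx: float, fy: float, u0: float, v0: float
                ) -> Tuple[float, float, float, float]:
    """Pinhole projection; returns the enclosing 2D box (u_min, v_min, u_max, v_max) in pixels."""
    if any(z <= 0 for _, _, z in corners):
        raise ValueError("box is partly behind the camera")
    us = [fx * x / z + u0 for x, _, z in corners]
    vs = [fy * y / z + v0 for _, y, z in corners]
    return min(us), min(vs), max(us), max(vs)

corners = box_corners(center=(0.0, 0.0, 10.0), size=(2.0, 2.0, 2.0), yaw=0.0)
print([round(v, 2) for v in project_box(corners, fx=100.0, fy=100.0, u0=50.0, v0=50.0)])
# [38.89, 38.89, 61.11, 61.11]
```

Because the camera and LiDAR are synchronized, one annotated 3D box can be projected into the matching camera frame this way, yielding a 2D label without manual drawing.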

After the data has been accumulated, the learning process is performed on computer servers. We then implement the trained algorithms in this vehicle to drive it. In this way, our resources cover the entire research process.

As I mentioned before, there are two types of data: image data and point cloud data. Because they are synchronized, 3D bounding boxes are automatically projected onto the 2D image.

This is made possible by assigning tracking IDs. Depending on the research, some algorithms use images only, while some members conduct R&D on algorithms that use both LiDAR point cloud data and camera images.

This is an example of our expressway data, and this is another example from a downtown area with more pedestrians and bicycles. We collect data according to our research themes and use it in our R&D.

Through our R&D, we contribute to DENSO’s products and services.

Thank you very much for your attention.